11 Extending a SOA Domain to Oracle Service Bus

This chapter describes the procedures for extending the domain to include Oracle Service Bus.

This chapter contains the following sections:

-

Section 11.1, "Overview of Adding Oracle Service Bus to a SOA Domain"

-

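Section 11.2, "Enabling VIP5 on SOAHOST1 and VIP6 on SOAHOST2"

-

Section 11.3, "Running the Configuration Wizard on SOAHOST1 to Extend a SOA Domain to Include Oracle Service Bus"

-

Section 11.4, "Disabling Host Name Verification for the WLS_OSBn Managed Servers"

-

Section 11.5, "Configuring Oracle Coherence for the Oracle Service Bus Result Cache"

-

Section 11.6, "Configuring a Default Persistence Store for Transaction Recovery"

-

Section 11.7, "Propagating the Domain Configuration to the Managed Server Directory in SOAHOST1 and to SOAHOST2"

-

Section 11.8, "Starting the Oracle Service Bus Servers"

-

Section 11.9, "Validating the WLS_OSB Managed Servers"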

-

Section 11.10, "Configuring Oracle HTTP Server for the WLS_OSBn Managed Servers"

-

Section 11.11, "Setting the Front End HTTP Host and Port for OSB_Cluster"

-

Section 11.12, "Validating Access Through Oracle HTTP Server"

-

Section 11.13, "Enabling High Availability for Oracle DB, File and FTP Adapters"

-

Section 11.14, "Configuring Server Migration for the WLS_OSB Servers"

-

Section 11.15, "Backing Up the Oracle Service Bus Configuration"

11.1 Overview of Adding Oracle Service Bus to a SOA Domain

This section provides an overview of adding Oracle Service Bus to a SOA domain. Table 11-1 lists and describes the high-level steps for extending a SOA domain for Oracle Service Bus.

Table 11-1 Steps for Extending a SOA Domain to Include Oracle Service Bus

| Step | Description | More Information |
|---|---|---|
| Enable VIP5 on SOAHOST1 and VIP6 on SOAHOST2 | Enable a virtual IP mapping for each of these host names on the two SOA machines. | Section 11.2, "Enabling VIP5 on SOAHOST1 and VIP6 on SOAHOST2" |
| Run the Configuration Wizard to Extend the Domain | Extend the SOA domain to contain Oracle Service Bus components. | Section 11.3, "Running the Configuration Wizard on SOAHOST1 to Extend a SOA Domain to Include Oracle Service Bus" |
| Disable Host Name Verification for the WLS_OSBn Managed Servers | If you have not set up the appropriate certificates for host name verification between the Administration Server, Managed Servers, and Node Manager, disable host name verification. | Section 11.4, "Disabling Host Name Verification for the WLS_OSBn Managed Servers" |
| Configure Oracle Coherence for the Oracle Service Bus Result Cache | Use unicast communication for the Oracle Service Bus result cache. | Section 11.5, "Configuring Oracle Coherence for the Oracle Service Bus Result Cache" |
| Configure a Default Persistence Store for Transaction Recovery | To leverage the migration capability of the Transaction Recovery Service for the servers within a cluster, store the transaction log in a location accessible to a server and its backup servers. | Section 11.6, "Configuring a Default Persistence Store for Transaction Recovery" |
| Propagate the Domain Configuration to the Managed Server Directory in SOAHOST1 and to SOAHOST2 | Oracle Service Bus requires some updates to the WebLogic Server start scripts. Propagate these changes using the pack and unpack commands. | Section 11.7, "Propagating the Domain Configuration to the Managed Server Directory in SOAHOST1 and to SOAHOST2" |
| Start the Oracle Service Bus Servers | Oracle Service Bus servers extend an already existing domain. As a result, the Administration Server and respective Node Managers are already running on SOAHOST1 and SOAHOST2. | Section 11.8, "Starting the Oracle Service Bus Servers" |
| Validate the WLS_OSB Managed Servers | Verify that the server status is reported as Running in the Administration Console, and access URLs to verify the status of the servers. | Section 11.9, "Validating the WLS_OSB Managed Servers" |
| Configure Oracle HTTP Server for the WLS_OSBn Managed Servers | To enable Oracle HTTP Server to route to the Oracle Service Bus console and Oracle Service Bus services, set the WebLogicCluster parameter to the list of nodes in the cluster. | Section 11.10, "Configuring Oracle HTTP Server for the WLS_OSBn Managed Servers" |
| Set the Front End HTTP Host and Port for OSB_Cluster | Set the front end HTTP host and port for the Oracle WebLogic Server cluster. | Section 11.11, "Setting the Front End HTTP Host and Port for OSB_Cluster" |
| Validate Access Through Oracle HTTP Server | Verify that Oracle HTTP Server routes the Oracle Service Bus URLs correctly and fails over between the WLS_OSB servers. | Section 11.12, "Validating Access Through Oracle HTTP Server" |
| Enable High Availability for Oracle File and FTP Adapters | Make Oracle File and FTP Adapters highly available for outbound operations using the database mutex locking operation. | Section 11.13, "Enabling High Availability for Oracle DB, File and FTP Adapters" |
| Configure Server Migration for the WLS_OSB Servers | The high availability architecture for an Oracle Service Bus system uses server migration to protect some singleton services against failures. | Section 11.14, "Configuring Server Migration for the WLS_OSB Servers" |

11.1.1 Prerequisites for Extending the SOA Domain to Include Oracle Service Bus

Before extending the current domain, ensure that your existing deployment meets the following prerequisites:

-

Back up the installation. If you have not yet backed up the existing Fusion Middleware Home and domain, Oracle recommends backing them up now.

To back up the existing Fusion Middleware Home and domain, run the following commands on SOAHOST1:

tar -cvpf fmwhomeback.tar ORACLE_BASE/product/fmw
tar -cvpf domainhomeback.tar ORACLE_BASE/admin/domain_name/aserver/domain_name

These commands create a backup of the installation files for both Oracle WebLogic Server and Oracle Fusion Middleware, as well as the domain configuration.

-

You have installed WL_HOME and MW_HOME (binaries) on shared storage, and they are available from both SOAHOST1 and SOAHOST2.

-

You have already configured Node Manager, the Administration Server, the SOA servers, and the WSM servers to run a SOA system, as described in previous chapters. You have also completed server migration, transaction logs, Coherence, and all other configuration steps for the SOA system.
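The backup commands in the first prerequisite can also be run as a small shell function that timestamps the archives and then lists each one to confirm that it is readable. This is a minimal sketch and not part of the documented procedure. The function name backup_fmw, its destination directory argument, and the ORACLE_BASE and DOMAIN_NAME environment variables are assumptions that stand in for the placeholders used in this guide.

```sh
# Hypothetical helper: back up the Fusion Middleware home and the Administration
# Server domain directory before extending the domain.
# Assumes ORACLE_BASE and DOMAIN_NAME are set for your environment.
backup_fmw() {
  local dest_dir stamp fmw_home domain_home
  dest_dir="$1"
  stamp="$(date +%Y%m%d%H%M%S)"
  fmw_home="${ORACLE_BASE}/product/fmw"
  domain_home="${ORACLE_BASE}/admin/${DOMAIN_NAME}/aserver/${DOMAIN_NAME}"

  mkdir -p "$dest_dir" || return 1
  # Same options as the documented commands, without -v to keep output quiet.
  tar -cpf "${dest_dir}/fmwhomeback_${stamp}.tar" "$fmw_home" || return 1
  tar -cpf "${dest_dir}/domainhomeback_${stamp}.tar" "$domain_home" || return 1

  # Listing each archive end to end confirms it was written completely.
  tar -tf "${dest_dir}/fmwhomeback_${stamp}.tar" > /dev/null || return 1
  tar -tf "${dest_dir}/domainhomeback_${stamp}.tar" > /dev/null || return 1

  # Print the timestamp so callers can locate the archives.
  echo "$stamp"
}
```

For example, running backup_fmw /backups on SOAHOST1 (the /backups directory is hypothetical) writes fmwhomeback_<timestamp>.tar and domainhomeback_<timestamp>.tar to that directory.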

11.2 Enabling VIP5 on SOAHOST1 and VIP6 on SOAHOST2

The SOA domain uses virtual host names as the listen addresses for the Oracle Service Bus managed servers. Enable a virtual IP mapping for each of these host names on the two SOA machines (VIP5 on SOAHOST1 and VIP6 on SOAHOST2), and make sure the virtual host names resolve correctly in the network system used by the topology (either through a DNS server or through hosts file resolution).

To enable the virtual IPs, follow the steps described in Section 3.5, "Enabling Virtual IP Addresses for Administration Servers." These virtual IPs and VHNs are required to enable server migration for the Oracle Service Bus servers. Server migration must be configured for the Oracle Service Bus cluster for high availability. For details on configuring server migration for the Oracle Service Bus servers, see Section 11.14, "Configuring Server Migration for the WLS_OSB Servers."
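Before you enable the virtual IPs, you can check that the virtual host names resolve on each SOA host. The following is an illustration only. It assumes a Linux host with getent available, and it assumes that VIP5 and VIP6 correspond to SOAHOST1VHN2 and SOAHOST2VHN2, the listen addresses that OSB_Cluster uses later in this chapter. The function name is hypothetical.

```sh
# Hypothetical pre-check: report whether each virtual host name resolves through
# the host's name service (DNS or /etc/hosts). Returns nonzero on any failure.
check_vhn_resolution() {
  local name addr failed
  failed=0
  for name in "$@"; do
    # getent follows the same resolver order (nsswitch.conf) as other programs.
    addr="$(getent hosts "$name" | awk '{ print $1; exit }')"
    if [ -n "$addr" ]; then
      echo "OK   $name -> $addr"
    else
      echo "FAIL $name does not resolve"
      failed=1
    fi
  done
  return "$failed"
}

# Example, run on both SOAHOST1 and SOAHOST2:
# check_vhn_resolution SOAHOST1VHN2 SOAHOST2VHN2
```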

11.3 Running the Configuration Wizard on SOAHOST1 to Extend a SOA Domain to Include Oracle Service Bus

In this step, you extend the domain created in Chapter 9, "Extending the Domain for SOA Components," to contain Oracle Service Bus components. The steps are very similar if Oracle Service Bus extends a domain that contains only an Administration Server and a WSM-PM cluster, but some of the options, libraries, and components shown in the screens may vary.

To extend the domain for Oracle Service Bus:

-

Change directory to the location of the Configuration Wizard, which is in the Oracle Common home directory. (All database instances should be up.)

cd ORACLE_COMMON_HOME/common/bin

-

Start the Configuration Wizard.

./config.sh

-

In the Welcome screen, select Extend an existing WebLogic domain, and click Next.

-

In the WebLogic Domain Directory screen, select the WebLogic domain directory:

ORACLE_BASE/admin/domain_name/aserver/domain_name

Click Next.

-

In the Select Extension Source screen, select Extend my domain automatically to support the following added products and select the following products (the components required by Oracle SOA and Oracle WSM Policy Manager should already be selected and grayed out):

-

Oracle Service Bus OWSM Extension - 11.1.1.6 [osb]

-

Oracle Service Bus - 11.1.1.0 [osb]

-

WebLogic Advance Web Services JAX-RPC Extension

-

-

In the Configure JDBC Components Schema screen, do the following:

-

Select the OSB JMS Reporting Provider schema.

-

For the Oracle RAC configuration for component schemas, select Convert to GridLink.

Click Next. The Configure GridLink RAC Component Schema screen appears.

-

-

In the Configure GridLink RAC Component Schema screen, accept the values for the data sources that are already present in the domain and click Next.

-

In the Test JDBC Component Schema screen, verify that the test succeeds for the Oracle Service Bus JMS reporting data sources and click Next.

-

In the Select Optional Configuration screen, select the following:

-

JMS Distributed Destinations

-

Managed Servers, Clusters, and Machines

-

Deployments and Services

-

JMS File Store

Click Next.

-

-

In the Select JMS Distributed Destination Type screen, leave the pre-existing JMS system resources as they are, and select UDD from the drop-down list for WseeJmsModule and JmsResources.

Click Next.

-

In the Configure Managed Servers screen, add the required managed servers for Oracle Service Bus.

-

Select the automatically created server and click Rename to change the name to WLS_OSB1.

-

Click Add to add another new server and enter WLS_OSB2 as the server name.

-

Give servers WLS_OSB1 and WLS_OSB2 the attributes listed in Table 11-2.

In the end, the list of managed servers should match Table 11-2.

Click Next.

-

-

In the Configure Clusters screen, add the Oracle Service Bus cluster (leave the existing clusters as they are):

| Name | Cluster Messaging Mode | Multicast Address | Multicast Port | Cluster Address |
|---|---|---|---|---|
| SOA_Cluster (*) | unicast | n/a | n/a | SOAHOST1VHN1:8001, SOAHOST2VHN1:8001 |
| WSM-PM_Cluster | unicast | n/a | n/a | Leave it empty. |
| OSB_Cluster | unicast | n/a | n/a | SOAHOST1VHN2:8011, SOAHOST2VHN2:8011 |

(*) If you are extending a SOA domain.

Click Next.

Note:

For asynchronous request/response interactions over direct binding, the SOA composites must provide their JNDI provider URL so that the invoked service can look up the beans for callback.

If soa-infra configuration properties are not specified but the WebLogic Server cluster address is specified, the cluster address is used as the JNDI provider URL. This cluster address can be a single DNS name that maps to the clustered servers' IP addresses, or a comma-separated list of server ip:port entries. Alternatively, the soa-infra configuration property JndiProviderURL/SecureJndiProviderURL can be used for the same purpose if you set it explicitly.

-

In the Assign Servers to Clusters screen, assign servers to clusters as follows:

-

SOA_Cluster - If you are extending a SOA domain.

-

WLS_SOA1

-

WLS_SOA2

-

-

WSM-PM_Cluster:

-

WLS_WSM1

-

WLS_WSM2

-

-

OSB_Cluster:

-

WLS_OSB1

-

WLS_OSB2

Click Next.

-

-

-

In the Configure Machines screen, confirm that the following entries appear:

| Name | Node Manager Listen Address |
|---|---|
| SOAHOST1 | SOAHOST1 |
| SOAHOST2 | SOAHOST2 |
| ADMINHOST | localhost |

Leave all other fields at their default values.

Click Next.

-

In the Assign Servers to Machines screen, assign servers to machines as follows:

-

ADMINHOST:

-

AdminServer

-

-

SOAHOST1:

-

WLS_SOA1 (if extending a SOA domain)

-

WLS_WSM1

-

WLS_OSB1

-

-

SOAHOST2:

-

WLS_SOA2 (if extending a SOA domain)

-

WLS_WSM2

-

WLS_OSB2

Click Next.

-

-

-

In the Target Deployments to Clusters or Servers screen, ensure the following targets:

-

Target usermessagingserver and usermessagingdriver-email only to SOA_Cluster. (The usermessaging-xmpp, usermessaging-smpp, and usermessaging-voicexml applications are optional.)

-

Target the oracle.sdp.* and oracle.soa.* libraries only to SOA_Cluster.

-

Target the oracle.rules.* library only to AdminServer and SOA_Cluster.

-

Target the wsm-pm application only to WSM-PM_Cluster.

-

Target all Transport Provider Deployments to both the OSB_Cluster and the AdminServer.

Click Next.

For information on targeting applications and resources, see Appendix B, "Targeting Applications and Resources to Servers."

-

-

In the Target Services to Clusters or Servers screen:

-

Target mds-owsm only to WSM-PM_Cluster and AdminServer.

-

Target mds-soa only to SOA_Cluster.

Click Next.

-

-

In the Configure JMS File Stores screen, enter the shared directory location specified for your JMS stores as recommended in Section 4.3, "About Recommended Locations for the Different Directories." For example:

ORACLE_BASE/admin/domain_name/soa_cluster_name/jms

Select Direct-write policy for all stores.

Click Next.

-

In the Configuration Summary screen click Extend.

-

In the Extending Domain screen, click Done.

-

Restart the Administration Server for this configuration to take effect.

11.4 Disabling Host Name Verification for the WLS_OSBn Managed Servers

For the enterprise deployment described in this guide, you set up the appropriate certificates to authenticate the different nodes with the Administration Server after you have completed the procedures to extend the domain for Oracle SOA Suite. You must disable the host name verification for the WLS_OSB1 and WLS_OSB2 managed servers to avoid errors when managing the different WebLogic Server instances. For more information, see Section 8.4.8, "Disabling Host Name Verification."

You enable host name verification again once the enterprise deployment topology configuration is complete. For more information, see Section 13.3, "Enabling Host Name Verification Certificates for Node Manager in SOAHOST1."

11.5 Configuring Oracle Coherence for the Oracle Service Bus Result Cache

By default, result caching uses multicast communication. Oracle recommends using unicast communication for the Oracle Service Bus result cache. Additionally, Oracle recommends separating port ranges for Coherence clusters used by different products. The ports for the Oracle Service Bus result cache Coherence cluster should be different from the Coherence cluster used for SOA.

To enable unicast for the Oracle Service Bus result cache Coherence infrastructure:

-

Log into Oracle WebLogic Server Administration Console. In the Change Center, click Lock & Edit.

-

In the Domain Structure window, expand the Environment node.

-

Click Servers.

-

Click the name of the server (represented as a hyperlink) in the Name column of the table. The settings page for the selected server appears.

-

Click the Server Start tab.

-

Enter the following into the Arguments field for WLS_OSB1 on a single line, with no carriage returns:

-DOSB.coherence.localhost=soahost1vhn2 -DOSB.coherence.localport=7890 -DOSB.coherence.wka1=soahost1vhn2 -DOSB.coherence.wka1.port=7890 -DOSB.coherence.wka2=soahost2vhn2 -DOSB.coherence.wka2.port=7890

For WLS_OSB2, enter the following on a single line, no carriage returns:

-DOSB.coherence.localhost=soahost2vhn2 -DOSB.coherence.localport=7890 -DOSB.coherence.wka1=soahost1vhn2 -DOSB.coherence.wka1.port=7890 -DOSB.coherence.wka2=soahost2vhn2 -DOSB.coherence.wka2.port=7890

Note:

There should be no line breaks between the different -D parameters. Do not copy and paste the text above into the Arguments field of the Administration Console, because doing so may insert HTML tags into the Java arguments. The text must not contain any characters other than those included in the example above.

-

Save and activate the changes. You must restart the Oracle Service Bus servers for these changes to take effect.

Note:

The Coherence cluster used for Oracle Service Bus' result cache is configured above using port 7890. This port can be changed by specifying a different port (for example, 8089) with the following startup parameters:

-Dtangosol.coherence.wkan.port -Dtangosol.coherence.localport

For more information about Coherence Clusters see the Oracle Coherence Developer's Guide.

-

Ensure that these variables are passed to the managed server correctly by checking the server's output log.

Failure of the Oracle Coherence framework can prevent the result caching from working.
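The two argument strings above differ only in the local host name, so they can be generated rather than typed. This also avoids the stray characters that the note warns about. The second function is a rough version of the log check in the last step. Both are hypothetical helpers. The function names are illustrative, the well-known-address defaults mirror the example above, and the log check assumes that the server's output log (for example, WLS_OSB1.out in the server's logs directory) records the JVM arguments.

```sh
# Hypothetical helper: print the Coherence arguments for one WLS_OSB server on a
# single line, following the pattern above (two well-known addresses, one port).
osb_coherence_args() {
  local local_host port wka1 wka2
  local_host="$1"
  port="${2:-7890}"
  wka1="${3:-soahost1vhn2}"
  wka2="${4:-soahost2vhn2}"
  # printf reuses the '%s' format for each argument, so the pieces are joined
  # with no newline between them or at the end.
  printf '%s' "-DOSB.coherence.localhost=${local_host} -DOSB.coherence.localport=${port}" \
    " -DOSB.coherence.wka1=${wka1} -DOSB.coherence.wka1.port=${port}" \
    " -DOSB.coherence.wka2=${wka2} -DOSB.coherence.wka2.port=${port}"
}

# Hypothetical check: report any -D property from the arguments string that does
# not appear in the server's output log. Returns nonzero if something is missing.
check_coherence_args_in_log() {
  local args log_file arg missing
  args="$1"
  log_file="$2"
  missing=0
  for arg in $args; do    # unquoted on purpose: split the string into -D words
    if ! grep -qF -- "$arg" "$log_file"; then
      echo "missing: $arg"
      missing=1
    fi
  done
  return "$missing"
}

# Example (the log path is an assumption about your layout):
# check_coherence_args_in_log "$(osb_coherence_args soahost1vhn2)" \
#   ORACLE_BASE/admin/domain_name/mserver/domain_name/servers/WLS_OSB1/logs/WLS_OSB1.out
```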

11.6 Configuring a Default Persistence Store for Transaction Recovery

Each server has a transaction log that stores information about committed transactions that the server coordinates but that may not have been completed. WebLogic Server uses this transaction log to recover from system crashes or network failures. To leverage the migration capability of the Transaction Recovery Service for the servers within a cluster, store the transaction log in a location accessible to a server and its backup servers.

Note:

The recommended location is a dual-ported SCSI disk or a Storage Area Network (SAN).

To set the location for the default persistence stores:

-

Log into the Oracle WebLogic Server Administration Console.

-

In the Domain Structure window, expand the Environment node and then click the Servers node.

The Summary of Servers page appears.

-

Click the name of the server (represented as a hyperlink) in Name column of the table.

The settings page for the selected server appears and defaults to the Configuration tab.

-

Click the Services tab.

-

In the Default Store section of the page, enter the path to the folder where the default persistent store stores its data files.

The directory structure of the path is as follows:

ORACLE_BASE/admin/domain_name/soa_cluster_name/tlogs

-

Perform steps 2 through 5 for both the WLS_OSB1 and WLS_OSB2 servers.

-

Click Save and Activate Changes.

Note:

To enable migration of the Transaction Recovery Service, specify a location on a persistent storage solution that is available to other servers in the cluster. Both WLS_OSB1 and WLS_OSB2 must be able to access this directory. This directory must also exist before you restart the servers.
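You can check the directory requirements in the note above from the shell on each host. This sketch assumes that ORACLE_BASE, DOMAIN_NAME, and CLUSTER_DIR_NAME (standing in for soa_cluster_name) are set, and that the path is on storage mounted on both SOAHOST1 and SOAHOST2. The function name is hypothetical.

```sh
# Hypothetical pre-check for the shared transaction log directory.
# Assumes ORACLE_BASE, DOMAIN_NAME and CLUSTER_DIR_NAME are set and that the
# path is on storage shared by SOAHOST1 and SOAHOST2.
prepare_tlog_dir() {
  local tlog_dir probe
  tlog_dir="${ORACLE_BASE}/admin/${DOMAIN_NAME}/${CLUSTER_DIR_NAME}/tlogs"
  # The directory must exist before the servers are restarted.
  mkdir -p "$tlog_dir" || return 1
  # Create and remove a probe file to confirm the current user can write here.
  probe="${tlog_dir}/.write_test_$$"
  touch "$probe" && rm -f "$probe" || return 1
  echo "$tlog_dir"
}
```

Run it on SOAHOST1 and then on SOAHOST2. It should succeed and print the same path on both hosts.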

11.7 Propagating the Domain Configuration to the Managed Server Directory in SOAHOST1 and to SOAHOST2

Oracle Service Bus requires some updates to the WebLogic Server start scripts. Propagate these changes using the pack and unpack commands.

Note:

If you configure server migration before unpacking (extending the domain to include Oracle Service Bus), the wlsifconfig script is overwritten. You must restore it from the backup copy that the -overwrite_domain option creates.

Create a backup copy of the managed server domain directory and the managed server applications directory.

To propagate the start scripts and classpath configuration from the Administration Server's domain directory to the managed server domain directory:

-

Run the pack command on SOAHOST1 to create a template pack:

cd ORACLE_COMMON_HOME/common/bin
./pack.sh -managed=true -domain=ORACLE_BASE/admin/domain_name/aserver/domain_name -template=soadomaintemplateExtOSB.jar -template_name=soa_domain_templateExtOSB

-

Run the unpack command on SOAHOST1 to unpack the propagated template to the domain directory of the managed server:

./unpack.sh -domain=ORACLE_BASE/admin/domain_name/mserver/domain_name -overwrite_domain=true -template=soadomaintemplateExtOSB.jar -app_dir=ORACLE_BASE/admin/domain_name/mserver/applications

Note:

The -overwrite_domain option in the unpack command allows you to unpack a managed server template into an existing domain and existing applications directories. For any file that is overwritten, a backup copy of the original is created. If any modifications had been applied to the start scripts and EAR files in the managed server domain directory, restore them after this unpack operation.

-

To copy the template to SOAHOST2, run the following commands on SOAHOST1:

cd ORACLE_COMMON_HOME/common/bin
scp soadomaintemplateExtOSB.jar oracle@SOAHOST2:ORACLE_COMMON_HOME/common/bin

-

Run the unpack command on SOAHOST2 to unpack the propagated template:

cd ORACLE_COMMON_HOME/common/bin
./unpack.sh -domain=ORACLE_BASE/admin/domain_name/mserver/domain_name/ -overwrite_domain=true -template=soadomaintemplateExtOSB.jar -app_dir=ORACLE_BASE/admin/domain_name/mserver/applications

Note:

The configuration steps provided in this enterprise deployment topology are documented with the assumption that a local (per node) domain directory is used for each managed server.
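You can wrap the pack and unpack commands in this section so that the placeholder paths are filled in once and each command can be reviewed before it runs. This is an illustrative sketch. The run, pack_osb_template, and unpack_osb_template function names, the DRY_RUN switch, and the ORACLE_COMMON_HOME, ORACLE_BASE, and DOMAIN_NAME variables are assumptions. The pack.sh and unpack.sh options are exactly those in the documented commands.

```sh
# Hypothetical wrapper around the pack and unpack commands documented above.
# Assumes ORACLE_COMMON_HOME, ORACLE_BASE and DOMAIN_NAME are set.
# Set DRY_RUN=1 to print each command instead of running it.
OSB_TEMPLATE=soadomaintemplateExtOSB.jar

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Run on SOAHOST1: create the managed server template from the Administration Server domain.
pack_osb_template() {
  ( cd "${ORACLE_COMMON_HOME}/common/bin" &&
    run ./pack.sh -managed=true \
      -domain="${ORACLE_BASE}/admin/${DOMAIN_NAME}/aserver/${DOMAIN_NAME}" \
      -template="$OSB_TEMPLATE" \
      -template_name=soa_domain_templateExtOSB )
}

# Run on SOAHOST1, then on SOAHOST2 after copying the template there.
unpack_osb_template() {
  ( cd "${ORACLE_COMMON_HOME}/common/bin" &&
    run ./unpack.sh \
      -domain="${ORACLE_BASE}/admin/${DOMAIN_NAME}/mserver/${DOMAIN_NAME}" \
      -overwrite_domain=true \
      -template="$OSB_TEMPLATE" \
      -app_dir="${ORACLE_BASE}/admin/${DOMAIN_NAME}/mserver/applications" )
}
```

For example, setting DRY_RUN=1 before calling unpack_osb_template prints the unpack command without running it. Copy the template to SOAHOST2 with scp, as described in the steps above, before you run unpack_osb_template there. Running each function in a subshell keeps the cd from changing the caller's working directory.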

11.8 Starting the Oracle Service Bus Servers

Because the Oracle Service Bus servers extend an existing domain, this section assumes that the Administration Server and the respective Node Managers are already running on SOAHOST1 and SOAHOST2.

To start the new WLS_OSB servers:

-

Log into the Oracle WebLogic Server Administration Console at:

http://ADMINVHN:7001/console

-

In the Domain Structure window, expand the Environment node, then select Servers.

The Summary of Servers page appears.

-

Click the Control tab.

-

Select WLS_OSB1 from the Servers column of the table.

-

Click Start. Wait for the server to come up and check that its status is reported as RUNNING in the Administration Console.

-

Repeat steps 2 through 5 for WLS_OSB2.

11.9 Validating the WLS_OSB Managed Servers

Validate the WLS_OSB managed servers using the Oracle WebLogic Server Administration Console and by accessing URLs.

To validate the WLS_OSB managed servers:

-

Verify that the server status is reported as Running in the Administration Console. If the server is shown as Starting or Resuming, wait for the status to change to Running. If another status is reported (such as Admin or Failed), check the server output log files for errors. See Section 16.14, "Troubleshooting the Topology in an Enterprise Deployment" for possible causes.

-

Access the following URL to verify status of WLS_OSB1:

http://SOAHOST1VHN2:8011/sbinspection.wsil

With the default installation, this should be the HTTP response:

-

Access the following URL:

http://SOAHOST1VHN2:8011/alsb/ws/_async/AsyncResponseServiceJms?WSDL

With the default installation, this should be the HTTP response:

-

Access the equivalent URLs for:

http://SOAHOST2VHN2:8011/

-

Verify also the correct deployment of the Oracle Service Bus console to the Administration Server by accessing the following URL:

http://ADMINVHN:7001/sbconsole/

The Oracle Service Bus console should appear with no errors.
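You can script the URL checks above for both servers. In this sketch, osb_validation_urls builds the two validation URLs for each listen address, and check_urls prints the HTTP status code that curl receives for each URL. The function names are hypothetical. A 200 status only shows that the URL is being served, so you should still compare the response with the expected default response.

```sh
# Hypothetical helper: print the validation URLs from the steps above for each
# WLS_OSB listen address (host:port) given as an argument.
osb_validation_urls() {
  local hostport
  for hostport in "$@"; do
    echo "http://${hostport}/sbinspection.wsil"
    echo "http://${hostport}/alsb/ws/_async/AsyncResponseServiceJms?WSDL"
  done
}

# Read URLs on standard input and print the HTTP status code curl gets for each.
# curl reports 000 when it cannot connect at all.
check_urls() {
  local url code
  while read -r url; do
    code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")"
    echo "$code $url"
  done
}

# Example:
# osb_validation_urls SOAHOST1VHN2:8011 SOAHOST2VHN2:8011 | check_urls
```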

11.10 Configuring Oracle HTTP Server for the WLS_OSBn Managed Servers

To enable Oracle HTTP Server to route to the Oracle Service Bus console and the Oracle Service Bus services, set the WebLogicCluster parameter to the list of nodes in the cluster.

If you use a virtual host for administration, define the routing for /sbconsole in the context of that virtual host in the admin_vh.conf file. Similarly, add the rest of the Oracle Service Bus URLs to the osb_vh.conf file.

Begin the context paths of all HTTP proxy services with a common prefix, such as /osb/project-name/folder-name/proxy-service-name, to simplify routing for all the proxy services in Oracle HTTP Server.

To set the parameter:

-

On WEBHOST1 and WEBHOST2, add directives to the osb_vh.conf file located in the following directory:

ORACLE_BASE/admin/instance_name/config/OHS/component_name/moduleconf

Note that this assumes you created the osb_vh.conf file using the instructions in Section 7.6, "Defining Virtual Hosts."

Add the following directives inside the <VirtualHost> tags:

<Location /sbinspection.wsil >
   SetHandler weblogic-handler
   WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
   WLProxySSL ON
   WLProxySSLPassThrough ON
</Location>

<Location /sbresource >
   SetHandler weblogic-handler
   WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
   WLProxySSL ON
   WLProxySSLPassThrough ON
</Location>

<Location /osb >
   SetHandler weblogic-handler
   WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
   WLProxySSL ON
   WLProxySSLPassThrough ON
</Location>

<Location /alsb >
   SetHandler weblogic-handler
   WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
   WLProxySSL ON
   WLProxySSLPassThrough ON
</Location>

The osb_vh.conf file will appear as it does in Example 11-1.

-

Add the following entry to the admin_vh.conf file within the <VirtualHost> tags:

<Location /sbconsole >
   SetHandler weblogic-handler
   WebLogicHost ADMINVHN
   WeblogicPort 7001
</Location>

The admin_vh.conf file will appear as it does in Example 11-2.

-

Restart Oracle HTTP Server on WEBHOST1 and WEBHOST2:

WEBHOST1> ORACLE_BASE/admin/instance_name/bin/opmnctl restartproc ias-component=ohs1
WEBHOST2> ORACLE_BASE/admin/instance_name/bin/opmnctl restartproc ias-component=ohs2

Example 11-1 osb_vh.conf file

<VirtualHost *:7777>
   ServerName https://osb.mycompany.com:443
   ServerAdmin [email protected]
   RewriteEngine On
   RewriteOptions inherit
   <Location /sbinspection.wsil >
      SetHandler weblogic-handler
      WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
      WLProxySSL ON
      WLProxySSLPassThrough ON
   </Location>
   <Location /sbresource >
      SetHandler weblogic-handler
      WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
      WLProxySSL ON
      WLProxySSLPassThrough ON
   </Location>
   <Location /osb >
      SetHandler weblogic-handler
      WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
      WLProxySSL ON
      WLProxySSLPassThrough ON
   </Location>
   <Location /alsb >
      SetHandler weblogic-handler
      WebLogicCluster SOAHOST1VHN2:8011,SOAHOST2VHN2:8011
      WLProxySSL ON
      WLProxySSLPassThrough ON
   </Location>
</VirtualHost>

Example 11-2 admin_vh.conf file

# The admin URLs should only be accessible via the admin virtual host
<VirtualHost *:7777>
   ServerName admin.mycompany.com:80
   ServerAdmin [email protected]
   RewriteEngine On
   RewriteOptions inherit
   # Admin Server and EM
   <Location /console>
      SetHandler weblogic-handler
      WebLogicHost ADMINVHN
      WeblogicPort 7001
   </Location>
   <Location /consolehelp>
      SetHandler weblogic-handler
      WebLogicHost ADMINVHN
      WeblogicPort 7001
   </Location>
   <Location /em>
      SetHandler weblogic-handler
      WebLogicHost ADMINVHN
      WeblogicPort 7001
   </Location>
   <Location /sbconsole >
      SetHandler weblogic-handler
      WebLogicHost ADMINVHN
      WeblogicPort 7001
   </Location>
</VirtualHost>
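The four <Location> blocks in osb_vh.conf differ only in their path. Generating them from a single cluster list keeps the WebLogicCluster value identical across all blocks. The function below is a hypothetical helper. Review its output before you add it inside the <VirtualHost> tags, and restart Oracle HTTP Server afterward as described in the steps earlier in this section.

```sh
# Hypothetical generator for the osb_vh.conf <Location> blocks in Example 11-1.
# First argument: the WebLogicCluster list; remaining arguments: context paths.
osb_location_blocks() {
  local cluster path
  cluster="$1"
  shift
  for path in "$@"; do
    cat <<EOF
<Location ${path} >
   SetHandler weblogic-handler
   WebLogicCluster ${cluster}
   WLProxySSL ON
   WLProxySSLPassThrough ON
</Location>
EOF
  done
}

# Example: print the four blocks used in this chapter.
# osb_location_blocks SOAHOST1VHN2:8011,SOAHOST2VHN2:8011 /sbinspection.wsil /sbresource /osb /alsb
```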

11.11 Setting the Front End HTTP Host and Port for OSB_Cluster

Set the front end HTTP host and port for Oracle WebLogic Server cluster using the WebLogic Server Administration Console.

To set the front end host and port:

-

In the WebLogic Server Administration Console, in the Change Center section, click Lock & Edit.

-

In the left pane, select Environment and then Clusters.

-

Select the OSB_Cluster.

-

Select HTTP.

-

Set the values for the following:

-

Frontend Host: osb.mycompany.com

-

Frontend HTTP Port: 80

-

Frontend HTTPS Port: 443

Note:

Make sure this address is correct and available (the load balancing router is up). An incorrect value, such as http:// in the address or a trailing / in the host name, may prevent the SOA system from being accessible even when you use the virtual IPs to access it.

Click Save.

-

-

To activate the changes, click Activate Changes in the Change Center section of the Administration Console.
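The note in the steps above lists common mistakes in the Frontend Host value. A minimal check for them might look like the following. It assumes a Linux shell with getent. The function name and the extra whitespace check are illustrative additions, not part of the documented procedure.

```sh
# Hypothetical sanity check for the Frontend Host value: a bare host name with no
# scheme (http://), no trailing slash, and no spaces, which resolves from this host.
validate_frontend_host() {
  local host
  host="$1"
  case "$host" in
    "")     echo "empty host"; return 1 ;;
    *://*)  echo "remove the scheme from: $host"; return 1 ;;
    */)     echo "remove the trailing slash from: $host"; return 1 ;;
    *" "*)  echo "host contains a space: $host"; return 1 ;;
  esac
  if ! getent hosts "$host" > /dev/null; then
    echo "does not resolve: $host"
    return 1
  fi
  echo "ok: $host"
}

# Example:
# validate_frontend_host osb.mycompany.com
```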

11.12 Validating Access Through Oracle HTTP Server

Since you have already set the cluster address for the OSB_Cluster, the Oracle Service Bus URLs can only be verified once Oracle HTTP Server has been configured to route the Oracle Service Bus context URLs to the WebLogic Servers. Verify the URLs to ensure that appropriate routing and failover is working from the HTTP Server to the Oracle Service Bus components.

For information on configuring system access through the load balancer, see Section 3.3, "Configuring the Load Balancers."

To verify the URLs:

-

While WLS_OSB1 is running, stop WLS_OSB2 using the Oracle WebLogic Server Administration Console.

-

Access WebHost1:7777/sbinspection.wsil and verify the HTTP response as indicated in Section 11.9, "Validating the WLS_OSB Managed Servers."

-

Start WLS_OSB2 from the Oracle WebLogic Server Administration Console.

-

Stop WLS_OSB1 from the Oracle WebLogic Server Administration Console.

-

Access WebHost1:7777/sbinspection.wsil and verify the HTTP response as indicated in Section 11.9, "Validating the WLS_OSB Managed Servers."

Note:

Because a front end URL has been set for OSB_Cluster, requests to these URLs are redirected to the load balancer. In all cases, it is sufficient to verify the mount points and correct failover in Oracle HTTP Server.

-

Verify this URL using your load balancer address:

http://osb.mycompany.com:80/sbinspection.wsil

11.13 Enabling High Availability for Oracle DB, File and FTP Adapters

Oracle SOA Suite and Oracle Service Bus use the same database, File, and FTP JCA adapters. You create the required database schemas for these adapters when you run the Oracle Repository Creation Utility for SOA. The required configuration for the adapters is described in Section 9.8.1, "Enabling High Availability for Oracle File and FTP Adapters." The DB adapter does not require any configuration at the WebLogic Server resource level. If you are configuring Oracle Service Bus as an extension of a SOA domain, no adapter configuration is needed beyond what you have already performed.

If you are deploying Oracle Service Bus as an extension to a WSM-PM and Admin Server domain, do the following:

-

Run RCU to seed the Oracle Service Bus database with the required adapter schemas (Select SOA Infrastructure, and SOA and BAM Infrastructure in RCU).

-

Perform the steps in Section 9.8.1, "Enabling High Availability for Oracle File and FTP Adapters."

11.14 Configuring Server Migration for the WLS_OSB Servers

The high availability architecture for an Oracle Service Bus system uses server migration to protect some singleton services against failures. For more information on whole server migration, see Oracle Fusion Middleware Using Clusters for Oracle WebLogic Server.

The WLS_OSB1 managed server is configured to be restarted on SOAHOST2 in case of failure, and the WLS_OSB2 managed server is configured to be restarted on SOAHOST1 in case of failure. For this configuration the WLS_OSB1 and WLS_OSB2 servers listen on specific floating IPs that are failed over by WLS Server Migration.

Table 11-5 lists the high-level steps for configuring server migration for the WLS_OSB servers.

Table 11-5 Steps for Configuring Server Migration for the WLS_OSB Servers

| Step | Description | More Information |
|---|---|---|
| Set Up the User and Tablespace for the Server Migration Leasing Table | Set up the user and tablespace for the server migration leasing table. If a tablespace has already been set up for SOA, this step is not required. | Section 11.14.1, "Setting Up the User and Tablespace for the Server Migration Leasing Table" |
| Edit the Node Manager's Properties File | Edit the Node Manager properties file on the two nodes where the servers are running. | Section 11.14.2, "Editing the Node Manager's Properties File" |
| Set Environment and Superuser Privileges for the wlsifconfig.sh Script | Set the environment and superuser privileges for the wlsifconfig.sh script. | Section 11.14.3, "Setting Environment and Superuser Privileges for the wlsifconfig.sh Script" |
| Configure Server Migration Targets | Configure cluster migration targets, set the | |
| Validate Server Migration | Verify that server migration is working properly. | |

11.14.1 Setting Up the User and Tablespace for the Server Migration Leasing Table

Set up the user and tablespace for the server migration leasing table using SQL*Plus.

To create the user and tablespace:

-

Create a tablespace called leasing. For example, log on to SQL*Plus as the sysdba user and run the following command:

SQL> create tablespace leasing logging datafile 'DB_HOME/oradata/orcl/leasing.dbf' size 32m autoextend on next 32m maxsize 2048m extent management local;

-

Create a user named leasing and assign it to the leasing tablespace as follows:

SQL> create user leasing identified by password;
SQL> grant create table to leasing;
SQL> grant create session to leasing;
SQL> alter user leasing default tablespace leasing;
SQL> alter user leasing quota unlimited on LEASING;

-

Create the leasing table using the leasing.ddl script as follows:

-

Copy the leasing.ddl file located in the following directory to your database node:

WL_HOME/server/db/oracle/920

-

Connect to the database as the leasing user.

-

Run the leasing.ddl script in SQL*Plus as follows:

SQL> @copy_location/leasing.ddl;

-
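The SYSDBA statements above can be collected into one script so they can be reviewed before they run. The following is a minimal POSIX shell sketch, assuming you supply the datafile path and password yourself; the function name leasing_setup_sql is hypothetical and not part of any Oracle tool.

```shell
#!/bin/sh
# Sketch: emit the leasing tablespace/user SQL from Section 11.14.1 as one
# SQL*Plus script. Assumptions: DATAFILE and PASSWORD are supplied by you;
# PASSWORD must be a valid unquoted Oracle identifier. The function name is
# hypothetical.

# leasing_setup_sql DATAFILE PASSWORD: print the SQL script on stdout.
leasing_setup_sql() {
  datafile=${1:?usage: leasing_setup_sql DATAFILE PASSWORD}
  password=${2:?usage: leasing_setup_sql DATAFILE PASSWORD}
  # Unquoted EOF so $datafile and $password are substituted.
  cat <<EOF
create tablespace leasing logging datafile '$datafile'
  size 32m autoextend on next 32m maxsize 2048m extent management local;
create user leasing identified by $password;
grant create table to leasing;
grant create session to leasing;
alter user leasing default tablespace leasing;
alter user leasing quota unlimited on leasing;
exit
EOF
}
```

For example, `leasing_setup_sql DB_HOME/oradata/orcl/leasing.dbf password > leasing_setup.sql` followed by `sqlplus "/ as sysdba" @leasing_setup.sql`. The generated file contains the password, so delete it afterward. The leasing.ddl step still runs separately, connected as the leasing user.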

11.14.2 Editing the Node Manager's Properties File

Edit the Node Manager properties file on the two nodes where the servers are running. The nodemanager.properties file is located in the following directory:

WL_HOME/common/nodemanager

Add the following properties so that server migration works properly:

-

Interface

Interface=eth0

This property specifies the interface name for the floating IP (eth0, for example).

Note:

Do not specify the subinterface, such as eth0:1 or eth0:2. This interface is to be used without the :0 or :1. The Node Manager's scripts traverse the different :X enabled IPs to determine which to add or remove. For example, the valid values in Linux environments are eth0, eth1, eth2, eth3, and so on up to ethn, depending on the number of interfaces configured.

-

NetMask

NetMask=255.255.255.0

This property specifies the net mask for the interface for the floating IP.

-

UseMACBroadcast

UseMACBroadcast=true

This property specifies whether or not to use a node's MAC address when sending ARP packets, that is, whether or not to use the -b flag in the arping command.

Verify in the output of Node Manager (the shell where the Node Manager is started) that these properties are in use. Otherwise, problems may occur during migration. The output should be similar to the following:

...
StateCheckInterval=500
Interface=eth0 (Linux) or Interface="Local Area Connection" (Windows)
NetMask=255.255.255.0
UseMACBroadcast=true
...
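Editing nodemanager.properties by hand on both nodes is error-prone, especially when a property is already present. The following is a minimal POSIX shell sketch, assuming the file path is passed in and that eth0 and 255.255.255.0 are only example defaults; the helper names set_nm_property and configure_migration_props are hypothetical. It replaces existing values in place instead of appending duplicates.

```shell
#!/bin/sh
# Sketch: set the Node Manager properties required for server migration.
# Assumptions: the path to nodemanager.properties is passed in; eth0 and
# 255.255.255.0 are example defaults only. Helper names are hypothetical.

# set_nm_property FILE KEY VALUE: replace KEY's line if present, else append.
set_nm_property() {
  file=$1 key=$2 value=$3
  [ -f "$file" ] || : > "$file"
  if grep -q "^$key=" "$file"; then
    # Rewrite through a temp file, then copy back so the original file keeps
    # its owner and permissions.
    sed "s|^$key=.*|$key=$value|" "$file" > "$file.tmp" &&
      cat "$file.tmp" > "$file" && rm -f "$file.tmp"
  else
    # Make sure the last existing line ends with a newline before appending.
    if [ -n "$(tail -c 1 "$file")" ]; then echo >> "$file"; fi
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# configure_migration_props FILE [INTERFACE] [NETMASK]
configure_migration_props() {
  props=${1:?usage: configure_migration_props FILE [INTERFACE] [NETMASK]}
  set_nm_property "$props" Interface "${2:-eth0}"
  set_nm_property "$props" NetMask "${3:-255.255.255.0}"
  set_nm_property "$props" UseMACBroadcast true
}
```

For example, `configure_migration_props WL_HOME/common/nodemanager/nodemanager.properties eth0 255.255.255.0`. Node Manager reads this file at startup, so restart it and then check its output as described above.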

11.14.3 Setting Environment and Superuser Privileges for the wlsifconfig.sh Script

Set the environment and superuser privileges for the wlsifconfig.sh script.

To set the environment and superuser privileges:

-

Ensure that the PATH environment variable includes the directories that contain the files listed in Table 11-6.

-

Grant sudo privilege to the WebLogic user ('oracle') with no password restriction, and grant execute privilege on the /sbin/ifconfig and /sbin/arping binaries.

For security reasons, restrict sudo to the subset of commands required to run the wlsifconfig.sh script.

On Windows, the script is named wlsifconfig.cmd, and it can be run by a user with administrator privileges.

Note:

Ask the system administrator for the sudo and system rights as appropriate to this step.

-

Make sure the script is executable by the WebLogic user ('oracle'). The following is an example of an entry inside /etc/sudoers that grants the oracle user sudo execution privilege on ifconfig and arping with no password restriction:

Defaults:oracle !requiretty
oracle ALL=NOPASSWD: /sbin/ifconfig,/sbin/arping
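Both checks in this section can be scripted. In the POSIX shell sketch below, on_path takes the file names from Table 11-6 as arguments (that table is not reproduced here), and write_sudoers_rule writes the entry shown above to a file of your choice and, if visudo is installed, validates its syntax with `visudo -cf`. Both helper names are hypothetical. Installing the result into a drop-in directory such as /etc/sudoers.d assumes your sudoers configuration includes that directory.

```shell
#!/bin/sh
# Sketch for Section 11.14.3. Helper names are hypothetical.
# Assumption: a sudoers drop-in directory (for example /etc/sudoers.d) is
# included by your sudoers file; otherwise add the entry with visudo.

# on_path NAME...: report every NAME not found as a file in a $PATH directory.
on_path() {
  missing=0
  for name in "$@"; do
    # Subshell so the IFS and noglob changes do not leak into the caller.
    if ! (set -f; IFS=:; for dir in $PATH; do [ -f "$dir/$name" ] && exit 0; done; exit 1); then
      echo "not on PATH: $name" >&2
      missing=1
    fi
  done
  return $missing
}

# write_sudoers_rule FILE [USER]: write the sudoers entry and check its syntax.
write_sudoers_rule() {
  target=${1:?usage: write_sudoers_rule FILE [USER]}
  user=${2:-oracle}
  {
    printf 'Defaults:%s !requiretty\n' "$user"
    printf '%s ALL=NOPASSWD: /sbin/ifconfig,/sbin/arping\n' "$user"
  } > "$target" || return 1
  chmod 0440 "$target"
  # Validate only when visudo exists; its exit status becomes ours.
  if command -v visudo >/dev/null 2>&1; then
    visudo -cf "$target"
  fi
}
```

Run write_sudoers_rule against a scratch file, review it, and only then have the system administrator install it owned by root with mode 0440.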

11.14.4 Configuring Server Migration Targets

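Configure cluster migration by selecting the machines that servers can migrate to and by setting the data source for automatic migration (the DataSourceForAutomaticMigration attribute) to the leasing data source.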

To configure migration targets in a cluster:

-

Log in to the Oracle WebLogic Server Administration Console.

-

In the Domain Structure window, expand Environment and select Clusters.

The Summary of Clusters page appears.

-

Click the cluster for which you want to configure migration (OSB_Cluster) in the Name column of the table.

-

Click the Migration tab.

-

In the Change Center, click Lock & Edit.

-

In the Available field, select the machines to which to allow migration and click the right arrow. In this case, select SOAHOST1 and SOAHOST2.

-

Select the data source to be used for automatic migration. In this case, select the leasing data source and click Save.

-

Click Activate Changes.

-

Set the candidate machines for server migration for WLS_OSB1 and WLS_OSB2:


-

In the Domain Structure window of the Oracle WebLogic Server Administration Console, expand Environment and select Servers.

-

Select the server for which you want to configure migration.

-

Click the Migration tab.

-

In the Available field, located in the Migration Configuration section, select the machines to which to allow migration and click the right arrow. Select SOAHOST2 for WLS_OSB1. Select SOAHOST1 for WLS_OSB2.

-

Select Automatic Server Migration Enabled and click Save.

This enables the Node Manager to start a failed server on the target node automatically.

-

Click Activate Changes.

-

Restart the Administration Server and the WLS_OSB1 and WLS_OSB2 servers.

To restart the Administration Server, use the procedure in Section 8.4.3, "Starting the Administration Server on SOAHOST1."

For information on targeting applications and resources, see Appendix B, "Targeting Applications and Resources to Servers."


11.14.5 Validating Server Migration

To validate that Server Migration is working properly:

To test from Node 1:

-

Force stop the WLS_OSB1 managed server.

kill -9 pid

where pid specifies the process ID of the managed server. You can identify the pid on the node by running this command:

ps -ef | grep WLS_OSB1

Note:

For Windows, the managed server can be terminated by using the taskkill command. For example:

taskkill /f /pid pid

where pid is the process ID of the managed server.

To determine the process ID of the WLS_OSB1 managed server:

MW_HOME\jrockit_160_20_D1.0.1-2124\bin\jps -l -v

-

Watch the Node Manager console: you should see a message indicating that WLS_OSB1's floating IP has been disabled.

-

Wait for the Node Manager to try a second restart of WLS_OSB1. Node Manager waits for a fence period of 30 seconds before trying this restart.

-

Once Node Manager restarts the server, stop it again. Now Node Manager should log a message indicating that the server will not be restarted again locally.
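`ps -ef | grep WLS_OSB1` also matches the grep process itself and any server whose name merely starts with WLS_OSB1 (such as a hypothetical WLS_OSB10). The sketch below avoids both by matching the whole `-Dweblogic.Name=<server>` argument, assuming the server JVM carries that argument on its command line, as WebLogic Server start commands normally do; verify this on your hosts. server_pids and force_stop_server are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: find (and optionally force-stop) a managed server by name.
# Assumption: the JVM command line contains -Dweblogic.Name=<server>.
# Helper names are hypothetical.

# server_pids SERVER: read `ps -ef` lines on stdin and print the PID column
# of each line that has an argument exactly equal to -Dweblogic.Name=SERVER.
server_pids() {
  server=${1:?usage: server_pids SERVER_NAME}
  awk -v want="-Dweblogic.Name=$server" '
    { for (i = 1; i <= NF; i++) if ($i == want) { print $2; next } }
  '
}

# force_stop_server SERVER: kill -9 the matching process(es). Destructive;
# use only for the failover test described above.
force_stop_server() {
  pids=$(ps -ef | server_pids "${1:?usage: force_stop_server SERVER_NAME}")
  if [ -z "$pids" ]; then
    echo "no running process found for $1" >&2
    return 1
  fi
  # $pids is left unquoted on purpose so several PIDs become separate args.
  kill -9 $pids
}
```

For example, `ps -ef | server_pids WLS_OSB1` prints the PID, and `force_stop_server WLS_OSB1` performs the kill -9 step in one command.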

To test from Node 2:

-

Watch the local Node Manager console. About 30 seconds after the last attempt to restart WLS_OSB1 on Node 1, Node Manager on Node 2 should report that the floating IP for WLS_OSB1 is being brought up and that the server is being restarted on this node.

-

Access the sbinspection.wsil URL on the same IP address (the floating IP of WLS_OSB1, now active on Node 2) to confirm that the server is responding.
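Requesting the WSIL page from the command line gives a quick, repeatable confirmation that WLS_OSB1 answers on its floating address after migration. This sketch assumes curl is installed; the host and port arguments are placeholders for the virtual host name (or IP) and listen port you configured for WLS_OSB1, and wsil_url and check_wsil are hypothetical names. It treats HTTP 200 as success; adjust that if the URL is protected in your deployment.

```shell
#!/bin/sh
# Sketch: check the OSB WSIL page on WLS_OSB1's floating address.
# Assumptions: curl is installed; HOST/PORT are your WLS_OSB1 virtual host
# and listen port. Function names are hypothetical.

# wsil_url HOST PORT: print the WSIL URL for that address.
wsil_url() {
  printf 'http://%s:%s/sbinspection.wsil\n' "${1:?host}" "${2:?port}"
}

# check_wsil HOST PORT: print the HTTP status; succeed only on 200.
# curl reports 000 when it cannot connect at all.
check_wsil() {
  url=$(wsil_url "$1" "$2")
  code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
  echo "HTTP $code from $url"
  [ "$code" = 200 ]
}
```

Run `check_wsil` from a host that can reach the floating IP both before and after the migration; the same address should answer in both cases.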

To validate migration from the WebLogic Server Administration Console:

-

Log in to the Administration Console.

-

Click Domain in the left pane of the console.

-

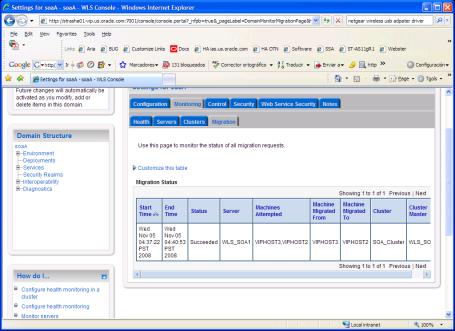

Click the Monitoring tab, and then click the Migration tab.

The Migration Status table provides information on the status of the migration.

Figure 11-3 Migration Status Screen in the Administration Console


Note:

After a server is migrated, to fail it back to its original node, stop the managed server from the Oracle WebLogic Administration Console and then start it again. The appropriate Node Manager starts the managed server on the machine to which it was originally assigned.

11.15 Backing Up the Oracle Service Bus Configuration

After you have verified that the extended domain is working, back up the domain configuration. This is a quick backup for the express purpose of immediate restore in case of failures in later procedures. Back up the configuration to the local disk. You can discard this backup after you complete the enterprise deployment; at that point, you can start the regular deployment-specific backup and recovery process.

For information about backing up the environment, see "Backing Up Your Environment" in the Oracle Fusion Middleware Administrator's Guide. For information about recovering your information, see "Recovering Your Environment" in the Oracle Fusion Middleware Administrator's Guide.

To back up the domain configuration:

-

Back up the Web tier:

-

Shut down the instance using opmnctl:

ORACLE_BASE/admin/instance_name/bin/opmnctl stopall

-

Back up the Middleware Home on the web tier using the following command (as root):

tar -cvpf BACKUP_LOCATION/web.tar MW_HOME

-

Back up the Instance Home on the web tier using the following command (as root):

tar -cvpf BACKUP_LOCATION/web_instance.tar ORACLE_INSTANCE

-

Start the instance using opmnctl:

ORACLE_BASE/admin/instance_name/bin/opmnctl startall

-

Back up the database. This is a full database backup (either hot or cold) using Oracle Recovery Manager (recommended) or OS tools such as tar for cold backups if possible.

-

Back up the Administration Server domain directory to save your domain configuration. The configuration files are located in the following directory:

ORACLE_BASE/admin/domain_name

To back up the Administration Server:

tar -cvpf edgdomainback.tar ORACLE_BASE/admin/domain_name
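The tar commands in this section can be wrapped so that each archive gets a timestamp and a missing source directory fails loudly instead of producing a useless backup. This is a minimal sketch, assuming the destination directory already exists and that you stop and start the web tier with opmnctl yourself; backup_tree is a hypothetical helper. It omits tar's -v flag so that standard output carries only the archive path.

```shell
#!/bin/sh
# Sketch: timestamped tar backups for Section 11.15.
# Assumptions: the destination directory exists and has space; opmnctl
# stop/start is done separately; run as root where the section says so.
# backup_tree is a hypothetical helper name.

# backup_tree LABEL SOURCE_DIR DEST_DIR: write DEST_DIR/LABEL-<timestamp>.tar
# and print the archive path on success.
backup_tree() {
  bt_label=$1 bt_src=$2 bt_dest=$3
  if [ ! -d "$bt_src" ]; then
    echo "missing directory: $bt_src" >&2
    return 1
  fi
  bt_archive="$bt_dest/$bt_label-$(date +%Y%m%d%H%M%S).tar"
  # -p is kept from the commands above; GNU tar applies it when extracting.
  tar -cpf "$bt_archive" "$bt_src" || return 1
  echo "$bt_archive"
}
```

For example, `backup_tree web MW_HOME BACKUP_LOCATION` and `backup_tree domain ORACLE_BASE/admin/domain_name BACKUP_LOCATION`, substituting the real paths.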