1 Oracle Enterprise Data Quality Applications Help Topics

The following sections describe the help available for the applications in Oracle Enterprise Data Quality:

1.1 Welcome to EDQ

Thank you for choosing Oracle Enterprise Data Quality (EDQ).

EDQ is a state-of-the-art collaborative data quality profiling, analysis, parsing, standardization, matching and merging product, designed to help you understand, improve, protect, and govern the quality of the information your business uses, all from a single integrated environment.

These help pages are designed to get you started quickly with EDQ, and to provide a useful reference when using the product.

1.1.1 Installing EDQ

EDQ should only be installed on machines where you want to run processes. Client machines do not require a server installation. Clients that have a supported Java Runtime Environment (JRE) installed can connect to an EDQ Server using a supported web browser. EDQ uses Java Web Start to download and start the client applications on the client machine.

See Installing and Configuring Oracle Enterprise Data Quality in the EDQ Release 12.2.1 document library.

1.1.2 Key Features

The following are the key features of EDQ:

-

Integrated data profiling, auditing, cleansing and matching

-

Browser-based client access

-

Ability to handle all types of data (for example, customer, product, asset, financial, and operational)

-

Connection to any Java Database Connectivity (JDBC) compliant data sources and targets

-

Multi-user project support (role-based access, issue tracking, process annotation, and version control)

-

Services Oriented Architecture (SOA) - support for designing processes that may be exposed to external applications as a service

-

Designed to process large data volumes

-

A single repository to hold data along with gathered statistics and project tracking information, with shared access

-

Intuitive graphical user interface designed to help you solve real-world information quality issues quickly

-

Easy, data-led creation and extension of validation and transformation rules

-

Fully extensible architecture allowing the insertion of any required custom processing

1.1.3 Contacting Oracle Support

The Oracle Technology Network offers a huge range of resources on Oracle software.

-

Discuss technical problems and solutions on the Discussion Forums.

-

Get hands-on step-by-step tutorials with Oracle By Example.

-

Download Sample Code.

-

Get the latest news and information on any Oracle product.

-

Access the Oracle Learning Library for free training videos and resources.

You can also get further help and information with Oracle software from:

-

My Oracle Support (requires registration)

-

Oracle Support Services

1.1.4 EDQ Language Options

All EDQ applications are provided with the following UI language options:

-

US English

-

French

-

Italian

-

German

-

Spanish

-

Chinese

-

Japanese

-

Korean

-

Brazilian Portuguese

The translations for all languages are automatically installed with EDQ and a single server supports clients in different languages.

Note:

-

EDQ is fully Unicode compliant and therefore can process data in any language. These language options are solely for controlling the UI text.

-

The locale settings of the client set the UI language. See Adjusting the Client Locale below for further details.

-

The online help and technical documentation of the product are currently provided in US English only.

-

User-specified names of configuration objects (such as projects, processes, reference data and so on) are not currently translatable. This also means that configuration object names used in pre-packaged extensions (such as the Customer Data Services Pack and Oracle Watchlist Screening), are in US English only.

1.1.4.1 Adjusting the Client Locale

A client machine will display the EDQ UIs in the local language according to the machine's display settings.

To adjust the language of the client in order to change the language of the UIs, use the following procedure:

-

Set the web browser's language display options to display web pages from the EDQ server in the chosen language.

-

Change the regional settings of the client machine in order to display the EDQ Java WebStart UIs in the chosen language; for example, on Windows machines change the System Locale, Format, and Display Language.

Notes:

-

Following the Java 7 Update 25, the Display Language now must be adjusted in order to modify the language in which Java applications are displayed. In previous versions, only the System Locale and Format were used. If you are using Windows, only Windows Enterprise and Windows Ultimate include the Multilingual User Interface Pack required to alter the Display Language from its installed setting.

-

For testing purposes, it is possible to override the client settings using a server option that sets the locale for all clients. To do this, add the following setting to [edq_local_home]/properties/clientstartup.properties:

locale = [ISO 639-2 Language Code]. For example, to make all client Java UIs appear in Japanese regardless of the client's regional settings, add this line:locale = ja

-

1.1.5 Terms of Use

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. No other rights are granted to the U.S. Government.

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications that may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Xeon are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Opteron, the AMD logo, and the AMD Opteron logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group.

This software or hardware and documentation may provide access to or information about content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services, except as set forth in an applicable agreement between you and Oracle.

1.2 EDQ Applications

The Oracle Enterprise Data Quality suite includes these applications:

1.2.1 Director

Director is the core application of the Oracle Enterprise Data Quality suite.

The Director user interface consists of the following on-screen elements.

1.2.1.1 Getting Started with Director

To get started with Director, see "Getting Started" in Oracle Fusion Middleware Using Oracle Enterprise Data Quality.

1.2.1.2 Menu

The Menu contains four submenus, as detailed below:

| Element | Description |

|---|---|

|

New Process... |

Create a new process (Ctrl+N). |

|

New Project... |

Create a new project. |

|

New Server... |

Connect to another OEDQ server. |

|

Open Package File |

Open a package file. |

|

Close |

Close the current selected process. |

|

Close All |

Close all open processes. |

|

Save |

Save the current selected process (Ctrl+S). |

|

Save All |

Save all open processes (Ctrl+Shift+S). |

|

|

Print the current canvas (Ctrl+P). |

|

Exit |

Exit OEDQ. |

| Element | Description |

|---|---|

|

Undo |

Undoes the last action on the canvas (Ctrl+Z). |

|

Redo |

Redoes the last action on the canvas (Ctrl+Y). |

|

Cut |

Cuts the selected processor(s) (Ctrl+X). |

|

Copy |

Copies the selected processor(s) (Ctrl+C). |

|

Paste |

Pastes the selected processor(s) (Ctrl+V). |

|

Delete |

Deletes the selected processor(s) (Delete). |

|

Rename |

Renames the selected object (F2). |

|

Select All |

Selects all items in the active pane (Ctrl+A). |

|

Preferences... |

Sets preferences for Processor Progress Reporting, Exporting Results to Excel and the Canvas. |

| Element | Description |

|---|---|

|

Zoom In |

Zooms in on the canvas. |

|

Zoom Out |

Zooms out on the canvas. |

|

Project Browser |

Shows or hides the Project Browser. |

|

Tool Palette |

Shows or hides the Tool Palette. |

|

Results Browser |

Shows or hides the Results Browser. |

|

Task Progress |

Shows or hides the Task Window. |

|

Canvas Overview |

Shows or hides the Canvas Overview. |

|

Server Console |

Opens the Server Console application. |

|

Configuration Analysis |

Opens the Configuration Analysis application. |

|

Web Service Tester |

Opens the Web Service Tester application. |

|

Scheduled Jobs |

Displays scheduled jobs on the server. |

|

Event Log |

Displays the Event Log. |

1.2.1.3 Toolbar

The toolbar provides easy access to a number of common functions in EDQ. The icons on the toolbar represent these common functions.

Tooltips are available so that when you hover over the icon on the toolbar, a brief description of its function is displayed.

1.2.1.4 Issue Notifier

The Issue Notifier shows how many open issues are currently assigned to you on the server to which you are connected.

Click on the Issue Notifier to launch the Issue Manager and display the details of the issues assigned to you.

1.2.1.5 Project Browser

The Project Browser allows you to browse an EDQ server, and the projects contained in it.

-

Projects

-

Reference Data

-

Data Stores

-

Published Processors

Note that you can copy most items in the Project Browser to new projects, or other EDQ servers, by dragging and dropping the item, or by using Copy (Ctrl+C) and Paste (Ctrl+V).

Right-click on a blank area of the Project Browser to connect to a new EDQ server, or to open a package file.

Objects in the Project Browser may appear with different overlay icons depending on their state. A green 'Play' icon indicates the object is running, an amber 'Stop' icon indicates that execution of the object was canceled, and a red 'Warning' icon indicates that execution of the object resulted in an error.

These states are:

-

Normal

-

Running

-

Canceled

-

Errored

There are two types of lock. A downwards-pointing red triangle indicates that the object is locked and cannot be opened (most likely because it is open for editing by another user). A blue dot indicates that the object is read-only (most likely because it is currently being used in a job and cannot be edited in order to preserve the logic of the job).

The lock states are:

-

Locked (the object cannot be viewed)

-

Read-Only (the object can be viewed, but not edited)

1.2.1.6 Canvas

The Canvas is where processes are opened, and therefore where data quality processes are designed using EDQ.

Many processes may be open on the Canvas at any one time.

You may close an individual process by selecting Right-click, Close, or by clicking on the Close process icon in the top-right of the canvas:The Canvas has its own toolbar for Canvas-specific actions.

Note that most of the main Toolbar functions can also be used for the Canvas.

Note that the process on the canvas may appear differently depending on its state. See Process states.

The processors in a process also change their appearance depending on their state. See Processor states.

If a process is open on the canvas when it is executed, you can view the progress of each processor in the process, and monitor the overall progress of the process. Otherwise, you can monitor its progress in the Task Window.

1.2.1.7 Tool Palette

The Tool Palette provides a list of all the available processors that you may use when working on projects in EDQ.

The processors are listed by their Processor family.

To use a processor in the definition of a process, drag it from the Tool Palette and drop it onto an open process.

You can then connect a processor's inputs and configure it for use in your process.You can also find a processor by searching for it using the search box at the bottom of the tool palette. This will quickly search for processors using the entered text. For example, to find all processors with a name containing the word 'length', simply enter 'length' in the search box:

1.2.1.8 Canvas Overview Pane

The overview pane is used to aid navigation around large processes, which cannot be viewed in their entirety on the Canvas.

The overview pane displays a thumbnail view of the whole process, with a rectangular overlay that shows the area currently visible on the canvas.

You can move around the process rapidly by dragging the rectangle to the area you want to examine. The Canvas will automatically move to the framed area.

1.2.1.9 Task Window

The Task Window allows you to see the progress of all currently running tasks, including jobs, processes and snapshots, on all connected servers. Tasks are grouped by server, and overlays are used to show the state of the task.

Jobs in the task window can be expanded to show more detailed information about the phases and processes involved in that job.

Right-clicking on a task displays a context-sensitive menu containing options available to the selected task.

Running tasks can be canceled. Tasks which have errored can be opened or edited. Errors can be displayed, or cleared. Selecting the Clear All Errors option removes all errored tasks from the task window.

1.2.1.10 Results Browser

The Results Browser is designed to help you to understand your data by providing an interactive way of browsing the results of your processing using EDQ. Provided you are using the repository on process execution, you can drill down on the statistics in the Results Browser to useful views of the data that are designed to help you form business rules for validating and transforming it.

There are a number of general functions available in the Results Browser. These functions are accessible from the toolbar at the top of the Results Browser window.

1.2.1.11 System-level Reference Data Library

The following sets of System-level Reference Data are provided with EDQ.

Note:

Reference Data lists and maps that are shipped with EDQ are named with an asterisk before their name to differentiate them from Reference Data that you create.Many of these lists and maps are used by processors by default. You may change these (by using them in projects, modifying them, and copying them back to the System library), though it is advisable to create new lists and maps with different names for your own needs, so that they will not be overwritten when EDQ is upgraded.

Oracle also provides packs of Reference Data for specific types of data, and for solving specific problems - for example, lists of known telephone number prefixes, name and address lists, and regular expressions for checking structured data such as URLs. These packs are available as extension packs for EDQ.

| Reference Data Name | Purpose |

|---|---|

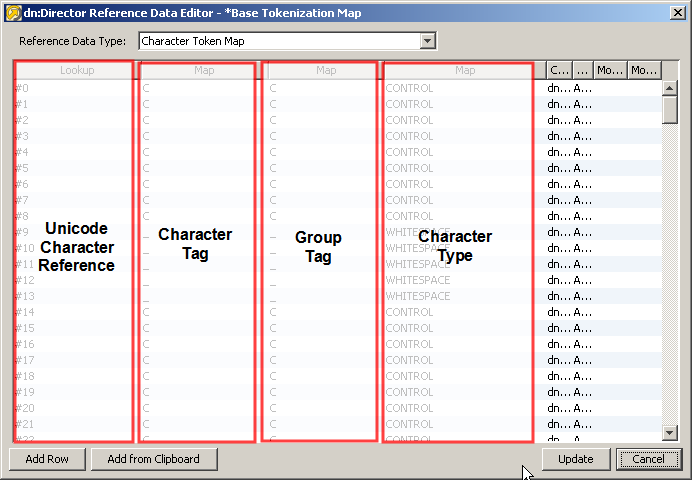

| *Base Tokenization Map | A reference data set used to tokenize data in the Parse processor, covering only a limited set of characters. Preserved for backward compatibility purposes. |

| *Character Pattern Map | A reference data set used to generate patterns in the Pattern processors, covering only a limited set of characters. Preserved for backward compatibility purposes. |

| *Date Formats | A list of standard formats for recognizing dates. |

| *Delimiters | A list of commonly used delimiters. |

| *Email Regex | A default regular expression used to check email addresses syntactically. |

| *No Data Handling | The standard EDQ set of No Data characters. |

| *Noise Characters | A list of common 'noise' characters. |

| *Number Bands | An example set of Number Bands, for the Number Profiler. |

| *Number Formats | A list of standard formats for recognizing numbers. |

| *Standardize Accented Characters | A character map used to standardize accented characters to their unaccented equivalents. |

| *UK Postcode Regex | A default regular expression used to check UK postcodes syntactically. |

| *Unicode Base Tokenization Map | The default reference data set used to tokenize data in the Parse processor, covering the entire Unicode range. |

| *Unicode Character Pattern Map | The default reference data set used to generate patterns in the pattern processors, covering the entire Unicode range. |

1.2.1.12 Run Profiles

Run Profiles are optional templates that specify a number of 'override' configuration settings for externalized options when a Job is run. They offer a convenient way of saving and reusing a number of configuration overrides, rather than specifying each override as a separate argument.

Run Profiles may be used when running jobs either from the Command Line Interface, using the runopsjob command, or in the Server Console UI.

Run Profiles can be created using any text editor. They must be saved with the .properties prefix to the oedq_local_home/runprofiles folder of the EDQ installation.

They are typically set up by an advanced user with knowledge of the ways configuration needs to be overridden in a production deployment. They may be created or edited directly by a user with access to the oedq_local_home directory, or transferred by an FTP task into the oedq_local_home/runprofiles directory. Solutions built using EDQ, such as Oracle Watchlist Screening, may include a number of pre-defined Run Profiles that are suitable for overriding externalized configuration options in pre-packaged jobs.

The template for creating Run Profiles is called template.properties, and can be found in the [Installpath]/oedq_local_home/run profiles directory. The template contains full instructions and examples for each type of override.

An example of a Run Profile file is included below.

######### Real-time Setup ########### # Globally turns on/off real-time screening phase.Start\ Real-time\ Screening.enabled = Y # Control single real-time screening types phase.Real-time\ Screening.process.Individual\ Real-time\ Screening.san_enabled = Y phase.Real-time\ Screening.process.Individual\ Real-time\ Screening.pep_enabled = Y phase.Real-time\ Screening.process.Individual\ Real-time\ Screening.edd_enabled = Y phase.Real-time\ Screening.process.Entity\ Real-time\ Screening.san_enabled = Y phase.Real-time\ Screening.process.Entity\ Real-time\ Screening.pep_enabled = Y phase.Real-time\ Screening.process.Entity\ Real-time\ Screening.edd_enabled = Y ########## Batch Setup ############## # Globally turns on/off batch screening phase.Start\ Batch\ Screening.enabled = Y # Control single batch screening types phase.Match\ Individuals\ Batch\ SAN.enabled = Y phase.Match\ Individuals\ Batch\ PEP.enabled = Y phase.Match\ Individuals\ Batch\ EDD.enabled = Y phase.Match\ Entities\ Batch\ SAN.enabled = Y phase.Match\ Entities\ Batch\ PEP.enabled = Y phase.Match\ Entities\ Batch\ EDD.enabled = Y ######## Screening Receipt ########## phase.Real-time\ Screening.process.Individual\ Real-time\ Screening.receipt_prefix = OWS phase.Real-time\ Screening.process.Individual\ Real-time\ Screening.receipt_suffix = IND phase.Real-time\ Screening.process.Entity\ Real-time\ Screening.receipt_prefix = OWS phase.Real-time\ Screening.process.Entity\ Real-time\ Screening.receipt_suffix = ENT

1.2.2 Server Console

Server Console is designed to be used by typical "Operations Users" in an organization; that is, those users who either do not require or should not have access to the full functionality to the Director UI.

The application can be connected to one or more EDQ servers. For further details, see Managing Server Connections.

Server Console is divided into the following functional areas:

-

Scheduler - Used to select jobs to run, either as prompted or according to a schedule.

-

Current Tasks - Shows all tasks currently running on the selected server, including those initiated in the Director UI.

-

Event Log - A historic view of all events (i.e. tasks and jobs) on the server, including those executed in Director UI.

-

Results - Shows the staged data and staged results views of all jobs run from Server Console UI, and also the results of jobs run from the Command Line with a run label.

Every user of Server Console may not necessarily need access to all these functional areas. Typical profiles could include:

-

Job User - Runs jobs only. Requires access to Scheduler and Current Tasks.

-

Quality Supervisor - View job results and analyzes issues. Requires access to Current Tasks, Event Log and Results.

1.2.2.1 Managing Server Connections

The Server menu on Server Console is used to manage connections to servers.

On start-up, Server Console is connected to the server it was launched from. It is possible to connect to more than one server at a time, and these are separated into tabs in the Server Console window.

To connect to a server:

-

On the Server menu, click Connect.

-

Click Connect. The Login dialog is displayed.

-

Enter the login credentials, and click OK.

To disconnect from a server:

-

Ensure the correct server is currently displayed (select the relevant tab).

-

On the Server menu, click Disconnect.

-

Click OK on the Disconnect dialog.

To add a new server:

-

On the Server menu, click New Server. The Add Server dialog is displayed.

-

Complete the fields, as described below:

Field Name Format Entry Alias

Free text

An alias to refer to the server in the Server Console UI.

Host

Free text

The name of the server.

Port

Numeric

The HTTP or HTTPS port to be used to connect to the server.

Secure

Check box (unchecked by default).

Used to specify whether a secure connection should be established with the server.

Path

Free text

The file path to the server.

User

Free text

The username for connecting to the server.

-

Click OK to add the server.

You can edit the details of a server that you have added.

Note:

You cannot edit the details of the server that Server Console is launched from.To edit a server:

-

Select the required server.

-

Disconnect the server (see Disconnect from a Server above).

-

On the Server menu, click Edit Server.

-

On the Edit Server dialog, alter the details of the server as required. The fields are identical to those in the Add Server dialog (as described above).

-

Click OK to save changes.

To remove a server:

Note:

You cannot remove the details of the server that Server Console is launched from.-

Select the required server.

-

On the Server menu, click Remove Server.

-

On the Remove dialog, click OK.

1.2.2.2 Scheduler

The Scheduler window is used to run one-off instances of jobs and to create or edit job schedules.

The window is divided into three areas:

-

Jobs - lists the jobs (by server, if more than one is available) that the user is authorized to run and schedule.

-

Jobs Details - displays the details of the selected job or jobs.

-

Schedules - lists the scheduled jobs.

To run a one-off job:

-

In the Jobs area, locate and double-click on the required job.

-

In the Job Details area, click the Run button. The Run dialog is displayed.

-

If available, select the required Run Profiles to override the settings for externalized configuration options in the Job.

-

Enter a Run Label to store the staged data results for the job against. You can either enter a new label, or select one from the drop-down list. Note: The drop-down list contains the last 100 used Run Labels.

-

Click OK to run the job.

The Schedule dialog is used to create and edit schedules for jobs:

To create a new schedule:

-

In the Jobs area, locate and double-click on the required job.

-

In the Job Details area, click the Schedule button. The Schedule dialog is displayed.

-

Select the Schedule type and enter the date and time details (see Schedule Types below).

-

Select the Run Profiles if required.

-

Enter a new Run Label, or select one from the drop-down list.

-

Click OK to save.

To edit a schedule:

-

In the Schedules area, locate and double-click on the required job.

-

In the Job Details area, click the Schedule button. The Schedule dialog is displayed.

-

Select the Schedule type and enter the date and time details (see Schedule Types below).

-

Select the Run Profiles if required.

-

Enter a new Run Label, or select one from the drop-down list.

-

Click OK to save.

To delete a schedule:

-

In the Schedule area, right click on the required schedule.

-

Select Delete.

-

In the Delete dialog, click Yes to delete or No to keep the schedule.

There are five schedule types available:

This option is for setting a job to run once only on a specified time and date.

-

Select Once on the Schedule dialog.

-

Enter the date and time required in the Run this job once on field. Either:

-

Change the day by clicking the up and down arrows on the right of the field:;

-

Change the day, month or year using the calendar on the right of the window; or

-

Edit the field manually, in the format shown:

dd-MMM-yyyy hh:mm.

This option is for scheduling a job to run once a day, either every day or at intervals of a specified number of days (for example, every third day or every tenth day).

-

Select Daily on the Schedule dialog.

-

In the Every field, enter the schedule frequency; for example, 1 - the job runs every day, 3 - the job runs every third day, and so on.

-

In the Server Time field, enter the time of day at which the job will run, in 24hr format.

-

In the Start Date field, enter the date of the first day of the schedule. To do this, either select the required date from the calendar on the right of the screen, or manually edit the field in the format

dd-MMM-yyyy.

This option is for scheduling a job on a weekly basis.

-

Select Weekly on the Schedule dialog.

-

In the Every area, check each day of the week on which the job will run. You can select any number of combination of days.

-

In the Server Time field, select the time at which the job will run, in 24hr format.

-

In the Start Date field, enter the date of the first day of the schedule. To do this, either select the required date from the calendar on the right of the screen, or manually edit the field in the form

dd-MMM-yyyy. Alternatively, to run the job on the first specified day of the week, clear the check box to disable the Start Date field.

This option is for scheduling a job on a specific day of a month.

-

Select Monthly on the Schedule dialog.

-

In the On drop-down list, select the day of the month.

-

In the Server Time field, select the time at which the job will run, in 24hr format.

-

Weekends are included in the schedule by default; that is, if the date selected falls on a weekend, the job will run as scheduled. To exclude weekends, check the Exclude Weekends field.

-

In the Start Date field, enter the date of the first day of the schedule. To do this, either select the required date from the calendar on the right of the screen, or manually edit the field in the form

dd-MMM-yyyy. Alternatively, to run the job on the first occurrence of the specified day of the month, clear the check box to disable the Start Date field.

This option sets the selected job to run whenever the server is started up.

1.2.2.2.1 Run Label

Run Labels are used to store results separately, where the same job is run several times either on different data sets, with different configuration options specified using Run Profiles, or simply at different times (for example, on a monthly schedule).

Run Labels are used in the Server Console application. The staged data results from a job are written out and stored by Run Label, and Server Console users can navigate through the Results in the Results window.

When running jobs in the Server Console UI, a Run Label must be specified. If a previously used Run Label is reused for the same job, the previously written results for that Job and Run Label combination will be overwritten.

Run Labels are not used when running jobs interactively in the Director UI. Results from these interactively run jobs are not visible in the Server Console UI, as they are assumed to be run during project design and testing rather than in production.

This also means that a results book export in a job will produce expected results when run from Director without a run label but when the same job is run using a run label then no results are exported as results book data is not generated when a run label is used. The 'results' that are not visible when run labels are used include things such as drilldowns and results books.

1.2.2.3 Current Tasks

The Current Tasks window shows all tasks currently running on the selected server, including those initiated in the Director UI.

It is divided into two areas:

-

Current Tasks area

-

Task Filter

This area displays the details of the tasks in progress. You can drill down into these tasks to check their status and progress by expanding each of the groups using the + button.

This area filters the details of the tasks currently running on the selected server.

The elements of this area are as follows:

Table 1-6 Task Filter Elements

| Element | Description |

|---|---|

|

Show by |

Sorts the contents of the Current Task area by Jobs (the default option) or Run Label. |

|

Auto Expand |

Check this box to automatically expand the contents listed in the Current Task area. This box is cleared by default. |

|

Projects |

The projects of the tasks being run. |

|

Jobs |

The names of the jobs being run. |

|

Labels |

The labels of the jobs being run. |

This dialog box is used to view the tasks currently running on all connected servers.

To open the popup, click View > Current Tasks Popup.

Note that the Current Tasks window shows all activity on all connected servers, including tasks and jobs that are run interactively using the Director UI, and any jobs that have been instigated externally using the Command Line Interface.

1.2.2.4 Event Log

The Event Log provides a complete history of all jobs and tasks that have run on an EDQ server.

By default, the most recent completed events of all types are shown in the log. However, you can filter the events using a number of criteria to display the events that you want to see. It is also possible to tailor the Event Log by changing the columns that are displayed in the top-level view. Double-clicking on an event will display further information where it is available.

The displayed view of events by any column can be sorted as required. However, older events are not displayed by default, so a filter must be applied before sorting before they can be viewed.

An event is added to the Event Log whenever a Job, Task, or System Task either starts or finishes.

Tasks are run either as part of Jobs or individually instigated using the Director UI.

The following types of Task are logged:

-

Process

-

Snapshot

-

Export

-

Results Export

-

External Task

-

File Download

The following types of System Tasks are logged:

-

OFB - a System Task meaning 'Optimize for Browse' - this optimizes written results for browsing in the Results Browser by indexing the data to enable sorting and filtering of the data. The 'OFB' task will normally run immediately after a Snapshot or Process task has run, but may also be manually instigated using the EDQ client by right-clicking on a set of Staged Data and selecting Enable Sort/Filter, or by a user attempting to sort or filter on a non-optimized column, and choosing to optimize it immediately.

-

DASHBOARD - a System Task to publish results to the Dashboard. This runs immediately after a Process task has been run with the Publish to Dashboard option checked.

If the Director UI is connected to multiple servers, you can switch servers using the Server drop-down field in the top-left hand corner.

If Server Console UI is connected to multiple servers, select the required server in the tab list at the top of the window.

The following filtering events are available:

Quick filter options are made available to filter by Event Type, Status and Task Type. Simply select the values that you want to include in the filter (using Control - Select to select multiple items) and click on the Run Filter button on the bottom left of the screen to filter the events.

Further free-text filtering options are available to filter by Project Name, Job Name, Task Name and User Name. These are free-text so that you can enter partial names into the fields. You can enter a partial name into any of these fields - provided the object contains the partial name, it will be displayed (though note that matching is case-sensitive). For example, if you use a naming convention where all projects working on live systems have a name including the word 'Live' you can display all events for live systems.

Note:

The Project Name column is not displayed by default. To change the view to see it, click on the Select Columns button on the left hand side, and check the Project Name box.The final set of filters, on the right-hand side of the screen, allow you to filter the list of events by date and time. A Date picker is provided to make it easier to specify a given date. Note that although only the most recent events are shown when accessing the Event Log, it is possible to apply filters to view older events if required.

Note:

Events are never deleted from the history by EDQ, though they are stored in the repository and may be subject to any custom database-level archival or deletion policies that have been configured on the repository database.Events may be filtered by their start times and/or by their end times. For example, you can apply a filter to see all Jobs and Tasks (but not System Tasks) that completed in the month of November 2008.

To change the set of columns that are displayed on the Event Log, click the Select Columns button on the top left of the Event Log area. The Select Columns dialog is displayed. Select or deselect the columns as required, and click OK to save or Cancel to abandon the changes. Alternatively, click Defaults to restore the default settings.

Note that Severity is a rarely used column - it is currently set to 50 for tasks or jobs that completed correctly, and 100 for tasks or jobs that raised an error or a warning.

Double-clicking to open an event will reveal further detail where it is available.

Opening a Task will display the Task Log, showing any messages that were generated as the task ran.

Note:

Messages are classified as INFO, WARNING, or SEVERE. An INFO message is for information purposes and does not indicate a problem. A WARNING message is generated to indicate that there could be an issue with the process configuration (or data), but this will not cause the task to error. SEVERE messages are generated for errors in the task.For Jobs, if a notification email was configured on the job, the notification email will be displayed in a web browser when opening the completed event for the Job. Jobs with no notifications set up hold no further information.

Exporting Data from the Event Log

It is possible to export the viewable data in the Event Log to a CSV file. This may be useful if you are in contact with Oracle Support and they require details of what has run on the server.

To export the current view of events, click Export to CSV. This will launch a browser on the client for where to write the CSV file. Give the file a name and click Export to write the file.

1.2.2.5 Results

The Results window shows the staged data and staged results views of all jobs run from Server Console UI, and also the results of jobs run from the Command Line with a run label.

The window is divided into the Job History and Results Browser areas.

This area lists the jobs run in chronological order. It shows the Project, job, Run Label and end time for each one.

This area shows the details of the job selected in the Job History area above.

The Results Browser has various straight-forward options available as buttons at the top - hover over the button to see what it does.

However, there are a few additional features of the Results Browser that are less immediately obvious:

It is often useful to open a new window with the results from a given job. To do this, right-click on a job in the Job History area and select Open in a new window.

On occasion, you might see unusual characters in the Results Browser, or you might encounter very long fields that are difficult to see in their entirety.

For example, if you are processing data from a Unicode-enabled data store, it may be that the EDQ client does not have all the fonts installed to view the data correctly on-screen (though note that the data will still be processed correctly by the EDQ server).

In this case, it is useful to inspect the characters by right-clicking on a character or a string containing an unusual character, and selecting the Show Characters option. For example, the Character Profiler processor may work on some Unicode data, with a multi-byte character selected where the client does not have the required font installed to display the character correctly. The character therefore appears as two control characters.

If you right-click on the character and use the Show Characters option, EDQ can tell you the character range of the character in the Unicode specification.

The Show Characters option is also useful when working with very long fields (such as descriptions) that may be difficult to view fully in the Results Browser.

The Full column widths button Full column widths button will widen the columns to show the full data, but in this case there is too much data to show on the width of a screen. To see the FullDescription field as wrapped text, it is possible to right-click on the rows you want to view and use the Show Characters option. You can then click on the arrow at the top-right of the screen to show each value in a text area, and use the arrows at the bottom of the screen to scroll between records.

Clicking on the column header in the Results Browser will sort the data by that column. However, if you control-click on the column header (hold down the Ctrl key and click on the header), you can select all the visible (loaded) data in that column in the Results Browser. This is useful for example to copy all loaded rows, or to use them to create or add to reference data using the right-click option. Note that the Results Browser only loads 100 records by default, so you may want to use the Load All Data button before selecting the column header.

Multiple column headers can be selected in the same way.

To purge results in Server Console, right click on a Record in the Job History area.

Results can be purged by project, run label or job.

1.2.2.6 Result Purge Rules

Rules to automatically purge results under certain conditions can be set in Server Console using the Result Purge Rules dialog.

To open the dialog, select Tools > Purge Rules in the Server Console menu bar.

Note:

Rules are applied in the order shown in this dialog, from the top down. See Setting the Rule Order for further information.To add a rule:

-

Click the Add Rule button on the Result Purge Rules dialog. The New Rule dialog is displayed.

-

The Enabled checkbox is checked by default. To create a new rule without enabling it immediately, uncheck it.

-

Complete the fields as follows:

-

Name - Enter a name for the rule. This field is mandatory.

-

Purge results after - Specify the number of hours, days, weeks or months after which the results should be purged. Enter the number in the free-text field, and select the unit of time from the drop-down list. Alternatively, select "never" to ensure that results matching the rule criteria will not be purged. This field is mandatory.

-

Project - If required, select a specific project for the rule.

-

Job - If required, select a specific job for the rule.

-

Run Label - Either specify an exact Run Label (by entering it, or selecting it from the drop-down list), or enter a regular expression in the Regex field to capture Run Labels containing specific terms. For example, the Regex .*test.* would capture all Run Labels containing the word "test".

-

-

Click OK to save the new rule, or Cancel to abandon.

To edit a rule:

-

Either double click the rule, or select it and click the Edit Rule button. A dialog with all the details of the rule is displayed.

-

Edit the fields as required.

-

Click OK to save the changes, or Cancel to abandon.

To enable or disable a rule, uncheck the Enabled check box next to it. This checkbox can also be edited when the rule is opened for editing.

To delete a rule, select it in the Result Purge Rule dialog and click the Delete Rule button.

If a rule is deleted in error, click Cancel to close the dialog, not OK. On reopening, the accidentally deleted rule will be present again.

There are four buttons on the bottom right of the Result Purge Rule dialog for changing the order of the rules.

To move a rule, select it then:

-

click the Move rule to top button to move it to the top of the list;

-

click the Move rule up button to move it one place up the list;

-

click the Move rule down button to move to one place down the list; or

-

click the Move rule to the bottom button to move it to the bottom of the list.

1.2.3 Dashboard

The Dashboard provides a high-level view of results, in the form of Indexes, Summaries or by Rules. These are collectively known as elements. See the Dashboard Elements topic for further information.

Dashboard Administration is used to control user access to the Dashboard and to configure the Indexes, Summaries and Rules. The My Dashboard view is subdivided into Indexes, Summaries and Rules areas.

You can drill down through Indexes and Summaries by clicking on the name of the element. Alternatively, select an element and click the Graph icon on the right for a graphical view.

Note:

The contents of the window depend on the permission level of the user viewing it.Each Rule is followed by the name of the Summary it is contained within. Click this name to display all the Rules within that Summary.

To move an element within its area, select it and click the Up and Down arrows in the toolbar of the area.

To remove an element, select it and click the cross at the top of the area.

Adding an Index or Summary to the View

If there are Indexes or Summaries that have yet to be added, the following drop-down box is displayed on the top-left of the window.

Select the required Index or Summary and click Add.

To add rules to the view:

-

Click on a Summary. A full list of Rules within the Summary is displayed.

-

Select the Rule and click the pin button.

-

Repeat as required for other rules.

1.2.3.1 Dashboard Elements

The My Dashboard view is comprised of Elements, each of which has a Status derived from the results of the Element.

A Dashboard Element is a line item of data quality information that a user can monitor on the Dashboard. There are four types of Dashboard Element:

-

Indexes - a calculated value derived from a weighted set of Rule Results, tracked over time.

-

Summaries - a summary of the statuses of a number of Rule Results

-

Real Time Aggregations - an aggregation of the results of a Real Time Rule over a specified time period

-

Rule Results - published results from a processor in EDQ

Indexes, Summaries and Real Time Aggregations are three different ways of aggregating Rule Results, which may be generated either in Batch or Real Time.

An index is a type of dashboard element with a single numeric value, representing the aggregated results of a number of measures of data quality (Rule Results). The contributing measures are weighted to form an index across all chosen measures. Indexes are used for trend analysis in data quality, in order to track the data quality in one or many systems over a period of time. See the Dashboard Indexes topic for further information.

A Summary is a type of dashboard element that aggregates a number of Rule Results into a summarized view showing the number of rules of each status (Red, Amber and Green).

Summary dashboard elements are created directly whenever publishing rule results from an EDQ process (where a summary is created summarizing all the rule results that are published from the process), or they may be configured manually by a dashboard administrator. Where configured by an administrator, the Summary may aggregate results from a number of different processes, and if required, across a number of different projects.

Note that unlike all other types of dashboard element, Summaries do not support trend analysis. This is because the Rule Results that comprise the summaries may be changed over time, and may be published at different times.

A Real Time Aggregation is a type of dashboard element that aggregates a single Real Time Rule Result dashboard element into a set of results for a different (normally longer) time period. Real Time Rule Results are published by processes that run in interval mode - normally continuously running processes. Intervals may be written on a regular basis so that EDQ users can see results on a regular basis - for example every hour, or every 100 records. However, it may be that Executives or other users may want to monitor results on a daily or weekly basis. This can be achieved by configuring a Real Time Aggregation and making this element available to users rather than the underlying Real Time Rule Results.

Rule Results are dashboard elements that directly reflect the results of an EDQ processor that is configured to publish its results to the Dashboard. Rule Results are therefore the most granular (lowest level) type of dashboard element.

Rule Results may be either Periodic (published from batch processes), or Real Time (published from real time processes that run in interval mode). The following icons are used to represent the different types of Rule Results in the Dashboard Elements pane of the Dashboard Administration window:

-

Periodic Rule Results

-

Real Time Rule Results

1.2.3.2 Dashboard Administration

Dashboard Administration allows an administrator to configure:

-

the users who have access to published results;

-

the way that published results are aggregated, into Summaries, Indexes and Real Time Aggregations; and

-

the way that the status of each item on the Dashboard is calculated

It is also possible to delete items from the Dashboard, and to purge the results of published items.

Note:

Deleting an item from the Dashboard does not stop the underlying processor from publishing results in the future. Deleted items will be recreated in the Dashboard when the process next runs with 'Publish to Dashboard' enabled.See Dashboard Elements for further information on the terms and concepts used in Dashboard Administration.

Accessing Dashboard Administration

To access Dashboard Administration:

-

Open the Dashboard either from the Launchpad and log in as an administrator, or by right-clicking on a server in EDQ and selecting Display Dashboard.

-

Click the Administration button on the Dashboard front page.

This will start the Dashboard Administration, a Java Webstart application.

The Dashboard Administration GUI

There are two Views available on the Dashboard Administration GUI - Dashboard and Default Thresholds. These appear in the left-hand column:

-

The Dashboard View allows you to administer all published results.

-

The Default Thresholds View allows you to change the default way in which the status of Dashboard Elements of each type is calculated. The Default Thresholds can be over-ridden for specific Dashboard Elements if required.

1.2.3.2.1 Dashboard View

The Dashboard view of the Dashboard Administration dialog is divided into three panes as follows:

-

Dashboard Elements - a complete list of all Dashboard Elements, including configured aggregations, organized by their aggregation type.

-

Audits & Indexes - a list of published Dashboard Elements, and configured Indexes.

-

User Groups - a list of the configured User Groups, and the Dashboard Elements to which they have access.

The Dashboard Elements section shows all Dashboard Elements, organized by their aggregations. Note that all published results are aggregated, because new results are aggregated into a default Summary based on the EDQ process from which they were published. These Summaries always appear in the Dashboard Elements section even if they are not associated with any User Groups and so will not appear on any user dashboards. The same Rule Results may be listed under several aggregations.

The three types of aggregation are Indexes, Summaries and Real Time Aggregation.

Use the Dashboard Elements pane to create new aggregations of results, as follows:

To create a new index, click New Index at the bottom of the Dashboard Elements pane, and give the index a name, as you want it to appear on users' dashboards. For example an index to measure the quality of customer data might be named 'Customer DQ'.

To add rule results to the index, drag and drop Rule Results from either the Dashboard Elements pane or the Audits & Indexes pane. Note that if you drag a Summary onto the index, all the Rule Results that make up the summary are added to the index.

It is also possible to add other indexes to the index, to create an index of other indexes. To see how this will affect the index calculation, see Dashboard Indexes.

Once you have added all the contributing rules and/or other indexes, you can configure the weightings of the index. By default, all contributing rules/indexes will be equally weighted, but you can change this by right-clicking on the index and selecting Custom Weightings.

To change the weightings, change the weighting numbers. The percentage weighting of the contributing rule or index will be automatically calculated. For example, you can configure an index with six contributing rules. The Address populated rule is given a weighting of 2 (that is, it is weighted twice as strongly as the other rules):

|

Address populated |

2 |

28.57 |

|

Contact number populated |

1 |

14.29 |

|

Contact preferences populated |

1 |

14.29 |

|

Email address populated |

1 |

14.29 |

|

Mobile number populated |

1 |

14.29 |

|

Name populated |

1 |

14.29 |

If required, you can also change the way the status of the index (Red, Amber or Green) is calculated. Otherwise, the status of the index will be calculated using the rules expressed in the Default Thresholds section.

To change the way the status is calculated for this index only, right-click on the index and select Custom Thresholds. For example, you could configure a particular index to have a Red (alert) status whenever it is below 800 and an Amber status whenever it is below 700.

When you have finished configuring the index, you must choose which User Groups you want to be able to monitor the index. To do this, simply drag and drop the index on to groups in the User Groups pane. Users in those Groups will now be able to use the Customize link on the Dashboard to add the new index to their Dashboards.

To create a new summary, click on the New Summary button at the bottom of the Dashboard Elements pane, and give the summary a name, as you want it to appear on users' dashboards. For example a summary of all product data rules might be called Product Data.

To add rule results to the summary, drag and drop Rule Results from either the Dashboard Elements pane or the Audits & Indexes pane. Note that if you drag another summary onto the new summary, all the contributing rule results of the summary that you dragged will be added to the new summary.

If required, you can also change the way the status of the summary (Red, Amber or Green) is calculated. Otherwise, the status of the summary will be calculated using the rules expressed in the Default Thresholds section.

To change the way the status is calculated for this summary only, right-click on the summary and select Custom Thresholds. For example, you could configure a particular summary to have a Red (alert) status whenever 5 or more contributing rules are Red or when 10 or more contributing rules are Amber, and to have an Amber (warning) status whenever 1 or more contributing rules are Red or when 5 or more contributing rules are Amber.

When you have finished configuring the summary, you must choose which User Groups you want to be able to monitor the summary. To do this, simply drag and drop the summary on to groups in the User Groups pane. Users in those Groups will now be able to use the Customize link on the Dashboard to add the new summary to their Dashboards.

Creating a Real Time Aggregation

To create a new Real Time Aggregation, drag and drop a Real Time Rule Results dashboard element from the Audits & Indexes pane to the Real Time Aggregations node in the Dashboard Elements pane.

Real Time Rule Results are indicated by a globe icon.

You will be prompted to save before specifying the details of the Real Time Aggregation. For example, to create a daily aggregation of a real time rule that validates names, you might specify the following details:

Name: Name Validation (Daily) Aggregation settings Start date: 23-Jan-2009 00:00 Results by: Aggregate by time period: 1 days.

If a time period is specified, the Real Time Aggregation will include rule results for each completed interval that falls within the specified time period. (It will normally make sense to use a 'round' start time, such as midnight, for daily aggregations, and the beginning of an hour for hourly aggregations.)

If a number of intervals is specified the Real Time Aggregation will include rule results for the stated number of intervals starting from the stated start date and time.

In both cases, rule results are simply added up, so for example the number of Alerts for the aggregation will be the number of alerts summed across all included intervals.

If required, you can also change the way the status of the real time aggregation (Red, Amber or Green) is calculated. Otherwise, the status will be calculated using the rules expressed in the Default Thresholds section.

To change the way the status is calculated for this real time aggregation only, right-click on the aggregation and select Custom Thresholds. For example, you could configure a particular aggregation to have a Red (alert) status if 10% or more of the checks performed are alerts.

The Audits & Indexes pane shows all directly published Rule Results organized by 'Audits'; that is, the EDQ processes under which they were published, and all configured Indexes.

Drag Rule Results from this pane to the Dashboard Elements pane to create new aggregations of results.

To purge the data from an audit or an index, right click on the element in the Audits & Indexes pane and select Purge.

All the data that has been published to that element will be purged from the Dashboard. The results stored in EDQ will be unaffected.

The changes will not be made permanent until you save them in Dashboard Administration.

To delete an element from the list in the Audits and Indexes pane, right click on the element in the Audits & Indexes pane and select Delete.

The element will be deleted from the Dashboard and from Dashboard Administration. The changes will not be made permanent until you save them in Dashboard Administration.

Note:

Deleted elements will be reinstated if the process that published them is re-run with the 'Publish to Dashboard' option enabled. However, customizations that have been made in Dashboard Administration, such as custom thresholds, will not be re-created.The User Groups pane shows all configured User Groups, and the dashboard elements to which they have been granted access. To grant a group access to view a dashboard element, simply drag it from the Dashboard Elements pane on to the Group name.

Note that the actual dashboard elements that appear on a user's dashboard are configurable by the users themselves. Users can log in and click on the Customize link to change which dashboard elements they want to monitor.

1.2.3.2.2 Default Thresholds View

To change the default way in which statuses are calculated for each type of dashboard element, use the Default Thresholds view.

A tab exists for each type of dashboard element - Rules, Summaries, Indexes and Real Time Rules.

In all cases, the status of a dashboard element will be Green unless one of the stated threshold rules is hit. Otherwise, rules are applied on an OR basis. That is, if you have several rules in the Red section of the screen, the status of a dashboard element will be Red if any of these rules applies.

Note that the default thresholds may be overridden for any specific dashboard element by configuring custom thresholds in the Dashboard Elements section.

1.2.3.3 Dashboard Indexes

Indexes aggregate Rule Results, though it is also possible to aggregate indexes hierarchically to create an index of indexes. For example, a data quality index could be constructed for each of a number of source systems, or each of a number of types of data (customer, product etc.). An overall data quality index could then be constructed as an aggregation of these indexes.

Indexes are always configured in Dashboard Administration.

The index value means little in isolation. However, as the score is calculated from the results of a number of executions of a process or processes (over time), trend analysis will allow the business user to monitor whether the index has gone up or down. This is analogous to monitoring the FTSE100 index.

A higher index value represents a higher data quality score. By default, a 'perfect' DQ index score is 1000.

Where an index is made up of a number of Rule Results, it is calculated as a weighted average across the contributing results.

For example, a Customer Data DQ index may be made up of the following Rule Results and Weightings:

Table 1-8 Rule Results and Weightings

| Contributing Rule | Weighting |

|---|---|

|

Validate email address |

12.5% |

|

Validate address |

25% |

|

Title/gender mismatches |

37.5% |

|

Validate name |

25% |

In this configuration, the Validate address and Validate name rules have the default weighting of 25% (a quarter of the overall weight across four rules), but the administrator has specified different weightings for the other rules – the Validate email address rule is interpreted as less important, and the Title/Gender mismatch as more important.

The actual index score is then calculated as a weighted average across internally calculated index scores for each contributing rule.

For each rule, an index score out of 1000 (or the configured base perfect score) is calculated as follows, where 10 points are awarded for a pass, 5 points for a warning, and no points are awarded for an alert:

(((# of passes * 10) + (# of warnings * 5)) / (# of checks *10)) * 1000

For example, if the results of the contributing rules are as follows:

Table 1-9 Results of Contributing Rules

| Rule | Checks | Passes | Warnings | Alerts |

|---|---|---|---|---|

|

Validate email address |

1000 |

800 (80%) |

100 (10.0%) |

100 (10.0%) |

|

Validate address |

1000 |

800 (80%) |

0 (0%) |

200 (20.0%) |

|

Title/gender mismatches |

1000 |

800 (80%) |

0 (0%) |

200 (20.0%) |

|

Validate name |

1000 |

800 (80%) |

0 (0%) |

200 (20.0%) |

The index scores of each contributing rule will be as shown below:

| Rule | Index Score Calculation | Index Score |

|---|---|---|

|

Validate email address |

800 passes * 10 points = 8000 + 100 warnings * 5 points = 500 Total = 8500 1000 checks * 10 = 10000 8500/10000 = 0.85 * 1000 = 850 |

850 |

|

Validate address |

800 passes * 10 points = 8000+ 0 warnings * 5 points = 0 Total = 8000 1000 checks * 10 = 10000 8000/10000 = 0.8 * 1000 = 800 |

800 |

|

Title/gender mismatches |

800 passes * 10 points = 8000+ 0 warnings * 5 points = 0 Total = 8000 1000 checks * 10 = 10000 8000/10000 = 0.8 * 1000 = 800 |

800 |

|

Validate name |

800 passes * 10 points = 8000+ 0 warnings * 5 points = 0 Total = 8000 1000 checks * 10 = 10000 8000/10000 = 0.8 * 1000 = 800 |

800 |

The overall index score is then calculated using the weightings, as follows:

Validate email address score (850) * Validate email address weight (0.125) = 106.25 + Validate address score (800) * Validate address weight (0.25) = 200 + Title/gender mismatch score (800) * Title/gender mismatch weight (0.375) = 300 + Validate name score (800) * Validate name weight (0.25) = 200

The total Customer Data DQ index score is 806.25, and is rounded up to 806.3 for display purposes.

If an index is created to aggregate other indexes, the index is calculated simply as a weighted average of the contributing indexes. For example, the user might set up an index across a number of other indexes as follows:

Table 1-11 Contributing Indexes

| Contributing Index | Weighting |

|---|---|

|

Customer data index |

50% |

|

Contact data index |

25% |

|

Order data index |

25% |

If the index values of each indexes are as follows:

Table 1-12 Weighted Average of Contributing Indexes

| Contributing Index | Index Score |

|---|---|

|

Customer data index |

825.0 |

|

Contact data index |

756.8 |

|

Order data index |

928.2 |

The index would be calculated as follows:

Customer data index (825) * Customer data index weight (0.50) = 412.5 + Contact data index (756.8) * Contact data index weight (0.25) = 189.2 + Order data index (928.2) * Order data index weight (0.25) = 232.5

The overall data quality index would have a value of 834.2.

Indexes of Staggered Audit Results

Indexes may aggregate results from a number of processes. Normally, it is expected that this form of aggregation will be used when the processes are executed at the same intervals. However, this cannot be guaranteed. In some cases, the processes contributing to an index will be out of step. For example, two data quality audit processes are executed. An index is configured to aggregate rule results from both processes, and results for the index history are published as follows:

Table 1-13 Results for Index History

| Date | Results from Customer audit process run on | Results from Contact audit process run on |

|---|---|---|

|

12/06/05 |

12/06/05 |

12/06/05 |

|

13/06/05 |

13/06/05 |

12/06/05 |

|

14/06/05 |

13/06/05 |

14/06/05 |

|

15/06/05 |

15/06/05 |

14/06/05 |

|

16/06/05 |

16/06/05 |

16/06/05 |

This works by recalculating the results for the index every time one of its contributing processes is run. The results from the last run of each process are then used, and any previously calculated index results for a distinct date (day) are overwritten.

1.2.4 Match Review

Match Review is used to review possible matches identified by Batch or Real-Time Matching processes in Director. It is accessed via the Enterprise Data Quality Launchpad.

On launch, the Match Review Summary window is displayed. However, no content is displayed until an item in the Review area on the left of the window is selected.

The Summary window is then populated with the details of the selected review. For further information, see the Match Review Summary Window topic.

Match Review Application Window

1.2.4.1 Match Review Summary Window

The areas of the window are described below.

Contains the name of the currently selected Review, a status bar showing the percentage of assigned review groups completed, and a direct link to launch the Review Application.

Displays all the Reviews currently assigned (fully or partially) to the user.

This area breaks down the records by their matched status:

-

Automatic Match

-

Match

-

No Match

-

Possible Match

-

Pending

This area shows the records by Review Type:

-

Awaiting Review

-

User Reviewed

-

No Review Required

Note:

The number displayed in No Review Required will always be equal to the Automatic Match value in the Matching Status area.This area displays every rule triggered during the Matching process, and the number of resolved and unresolved relationships identified by each rule.

1.2.4.2 Match Review Application Window

The Review Application is launched by clicking either:

-

the Launch Review Application in the Title Bar; or

-

any of the links in the areas of the Summary window.

Note:

Users are often assigned to review matches by rule, and therefore would click on the required rule in the Rules area. Alternatively, if the number of possible matches is relatively low, they may view all of them by clicking Possible Matches in the Matching Status area.This window is divided into the following areas:

This table describes the toolbar items:

The fields in this area are used to search for specific groups using filtering criteria. See Filtering Groups for further information.

Records and Relationships Area

The Records area shows the records within the currently selected Review Group. Records that match are highlighted in yellow, records that are flagged for review are highlighted in mauve, and the currently selected record is always highlighted in blue.

The Relationships area shows the relationships between each record in the group, and indicates where there is a Match or a Possible Match.

So, in the example below, there are three records: R1, R2 and R3. The Relationship area shows that R1 is automatically matched with R2, and that there is a possible match between R1 and R3:

The Review Merged Output tab is divided into two areas:

-

Records: The matched records in the currently selected Review Group.

-

Merged Output: How the records will appear when merged.

1.2.4.3 Filtering Groups

The Filter Groups area is used to narrow down the groups displayed. Groups can be filtered by:

-

searching for a text value in record attributes; or

-

searching for specific review criteria; or

-

both.

The following table describes the user interface elements in this area:

| Item | Type | Description |

|---|---|---|

| Look For | Free-Text Field | Enter the text to search for in the Record attributes. |

| Search In | Drop-Down Field | Select the Record attribute to search within. |

| Relationship Attribute | Drop-Down Field | Select the Relationship criteria to search by. |

| Operator | Drop-Down Field | Select the required operator. Most searches will use = (Equals) or <> (Does not equal). |

| Relationship Value | Varies | Select the Relationship Value to search for. Depending on the Relationship Criteria selected, this will either be a drop-down, date selection or free-text field. |

| Find | Button | Run the filter. |

| Clear | Button | Clear all the filter fields. |

|

Use OR logic |

Checkbox | When selected, the records and relationships field filters are run separately, i.e. the groups displayed meet the criteria specified in either. If deselected, the filter results will match all the criteria specified in both records and relationships filter fields. The checkbox is selected by default. |

|

Case Sensitive |

Checkbox | Enables case-sensitive filtering of records based on any values set in the free-text fields. Not checked by default. |

|

Exact Match |

Checkbox | When checked, only records exactly matching all the filter criteria specified are returned. Not checked by default. |

To search for an individual by family name:

-

In the Look For field, enter the family name, for example Williams.

-

Select Family Name in the Search In drop-down field.

-

Decide whether to search for the exact name or using case sensitivity, and check or clear the Case Sensitive and Exact Match boxes accordingly.

-

Click Find. The first group found is displayed in the Records and Relationships areas.

To search for groups by an individual family name and by a Match Rule name:

-

In the Look For field, enter the family name.

-

Select Family Name in the Search In drop-down field.

-

In the Relationship Attribute field, select the Match Rule Name.

-

Leave the Operator field set to =.

-

In the Relationship Value field, select the rule name, for example Exact Name, Postcode.

-

Ensure the Use OR logic box is checked, Check or clear the Case Sensitive and Exact Match boxes as required.

-

Click Find. The first group found is displayed in the Records and Relationships areas.

-

Navigate through groups returned using the Group Navigation buttons in the task bar.

1.2.4.4 Reviewing Groups

The Match Review application allows users to review particular types of group by status, by the rule they trigger, or even by specific filter criteria.

In the Match Review Summary window, either:

-

click Launch Review Application in the Title Bar to view all Groups; or

-

click a link in the Matching Status, Review Status or Rules areas to view the Groups that fall within the category selected.

Note:

Most users will either need to view all Possible Matches (by clicking that link in the Matching Status area) or by Rule.

Alternatively, a user can search for Groups that fall within specific criteria relating to the Records within the groups or the Relationships between them. See the Filtering Groups topic for more information.

When the Groups are displayed, the user can navigate through them using the Group Navigation buttons in the toolbar of the Review Application.

To apply a decision, use the following procedure:

-

Review the information in the Records area. If necessary, click Highlight Differences to show where the Record Attributes differ.

-

In the Relationships area, select the required setting in the drop-down Decision field for each possible relationship. The options are:

-

Possible Match

-

Match

-

No Match

-

Pending

Whatever option is selected, the Relationship is updated with the name of the user applying the decision, and the time the decision is made.

-

-

If required, proceed to the next group using the Group Navigation buttons.

It is possible to add a comment to a Relationship, whether or not a decision has been made regarding it. The procedure varies depending on whether it is the first comment made or not

To add the first comment to a Relationship:

-

Click the Add First Comment button (

) next to the Relationship.

) next to the Relationship. -

In the Comment dialog, enter the text required.

-

Click OK to save (or Cancel to abandon).

-

The Comments dialog is displayed, showing the comment, the name of the user leaving the comment, and the date the comment was made.

-

Click OK to close the dialog. Alternatively, select the comment and click Delete to remove it, or click Add to add an additional comment.

To add an additional comment to a Relationship:

-

Click the Add Additional Comment button (

) next to the Relationship.

) next to the Relationship. -

In the Comments dialog, click Add.

-

In the Comment dialog, enter the text required.

-

Click OK to save (or Cancel to abandon).

-

The Comments dialog is displayed, showing the comment, the name of the user leaving the comment, and the date the comment was made.

-

Click OK to close the dialog. Alternatively, select the comment and click Delete to remove it, or click Add to add an additional comment.

1.2.4.5 Reviewing Merged Groups

Once Records within Groups are identified as matches, they are merged together. It is then possible to review the results of the merge and, if necessary, override the way in which the merged output record has been generated.

Manual decisions made that override merged output are stored against a hash of the match group, and will be retained as long as the set of records in the match group stays the same. A group is marked as Confirmed in the UI if the Review Group from which the Match Group has been derived is fully resolved (for example, all Review relationships have been marked as either Match or No Match). Unless the source data or the match rules change, the set of records being merged is likely to stay the same and the manual overrides will apply.

When overriding output, values in the candidate records in the group (the set of records being merged) can be selected as the output attribute by right-clicking on the value and selecting the merged output field to populate with the value. Alternatively, it is possible directly to override the output value by typing into the merged output field.

Any errors in automatic merged output generation are highlighted to the user in the UI. An error indicates that a manual decision for the output field is required.

Note: